Docker Tutorial

Docker in Production: Your journey starts here

Topics

| Section | Topic | Duration |
| --- | --- | --- |
| Getting Started | Prepare your local environment | 15 minutes |
| Part 1 | Introduction | 30 minutes |
| Part 2 | The basics | 45 minutes |
| Part 3 | Beyond the basics | 60 minutes |
| Part 4 | Platforms | 15 minutes |
| Part 5 | Next steps, future trends, Q&A | 10 minutes |

Getting Started

Prepare your local environment

Copy workshop material

- Plug the Tutorial USB stick into your laptop

- Copy workshop folder to your laptop

- Pass on the USB stick to your neighbour

Install tutorial prerequisites

- For Non-Windows users:

  - Go to Installers folder

  - Install VirtualBox

  - Install DockerToolbox

- For Windows users:

  - Go to Installers folder

  - Install VirtualBox

  - Install VirtualBox extension packs

  - Install DockerToolbox

  - Install Chrome

- Restart machine if requested

Extract the tutorial materials

Important: If you're using Windows, unzip file from Git Bash

- Unzip the file `usb-stick.zip` from the Tutorial USB stick

- Open the Slides (`slides/index.html`) in a browser tab

- Open the Tutorial Steps (`Tutorial.html`) in another tab

Things to note...

- If you get stuck, check with your neighbour -- have they got it working?

- If you don't finish a tutorial exercise, don't worry as each module is independent

- And you can take all this home to go over later!

- There is a lot of material to cover so please hold longer questions until the Q&A

A quick poll

- Who is new to Docker?

- Who is using it at work?

- Who is using it in production?

- Hands up if you're a dev...

- ...or an ops person...

- ...or something else?

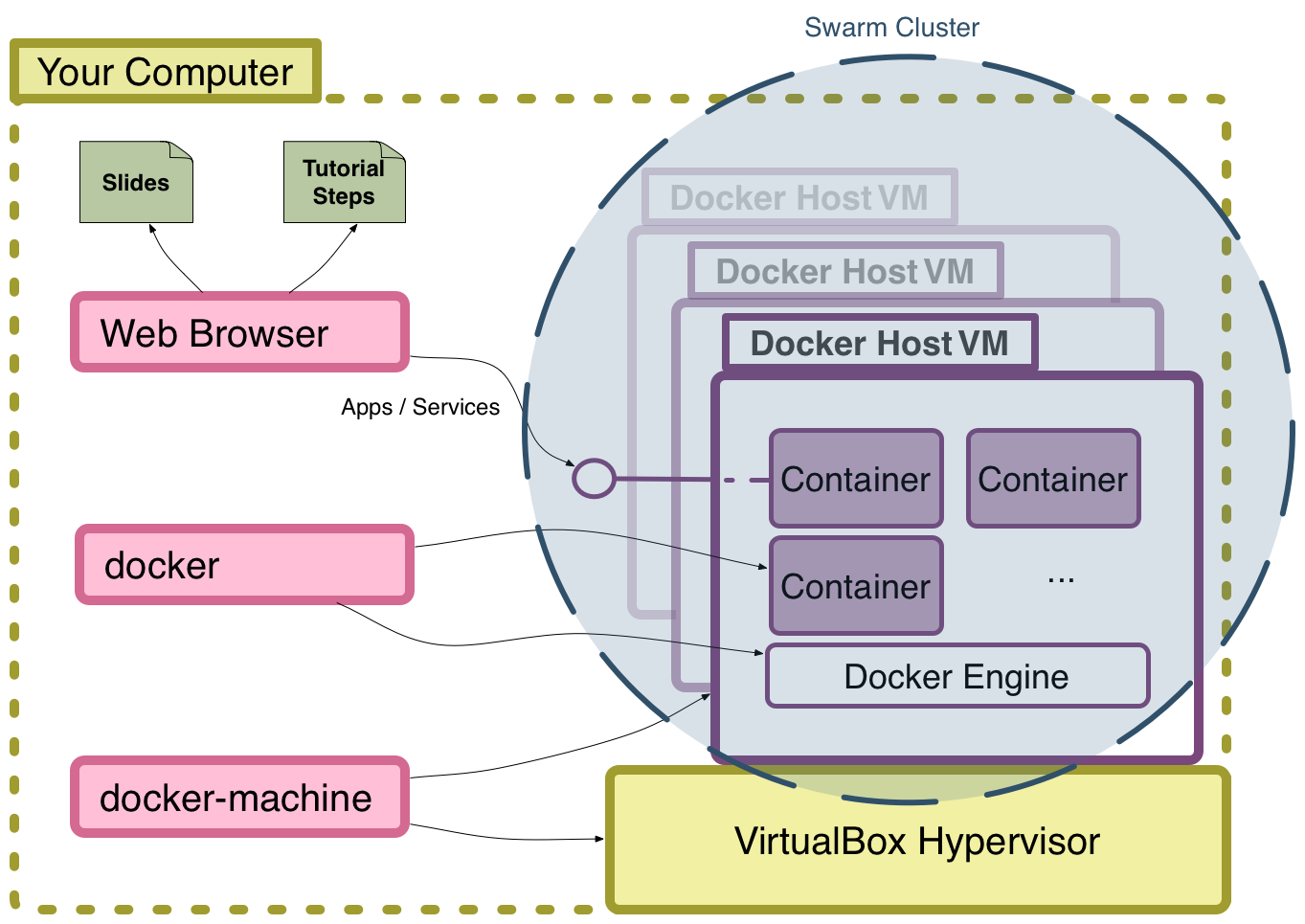

Tutorial Local Environment

Complete the Initial Setup

- View the Tutorial Steps

- You will need to complete the setup of your local environment to be able to work on the tutorial exercises

Part 1

Introduction

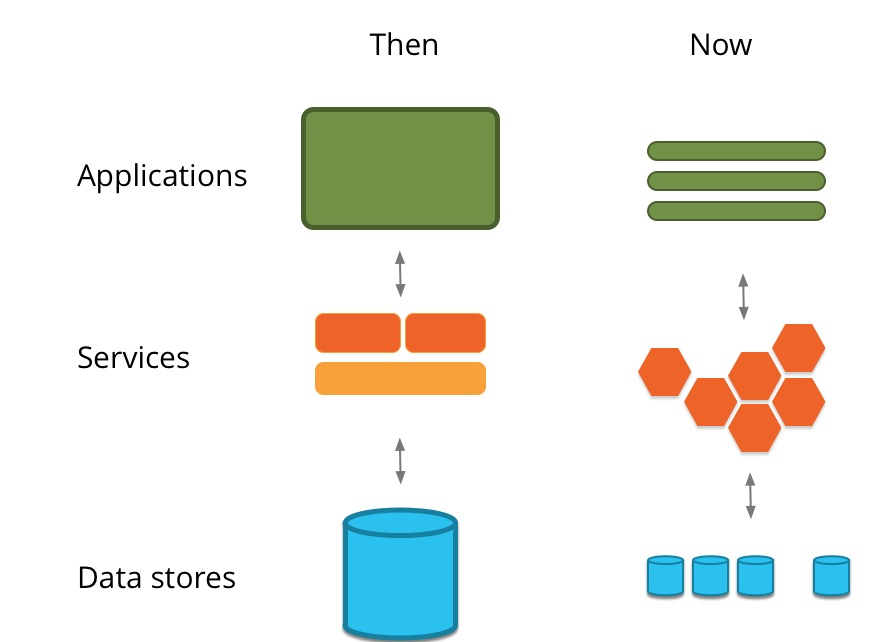

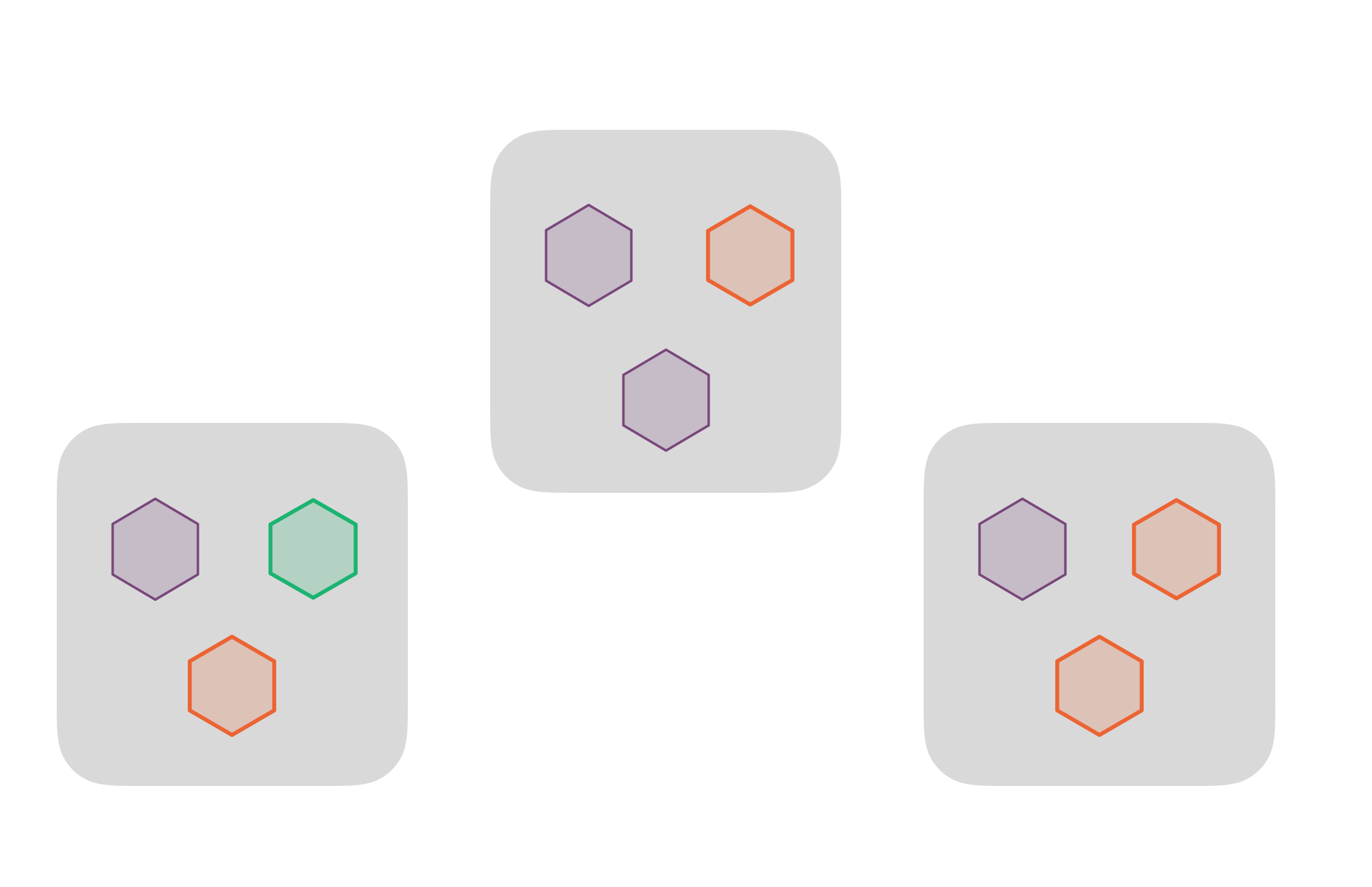

Application evolution

Single app deployment

Evolution of deployment

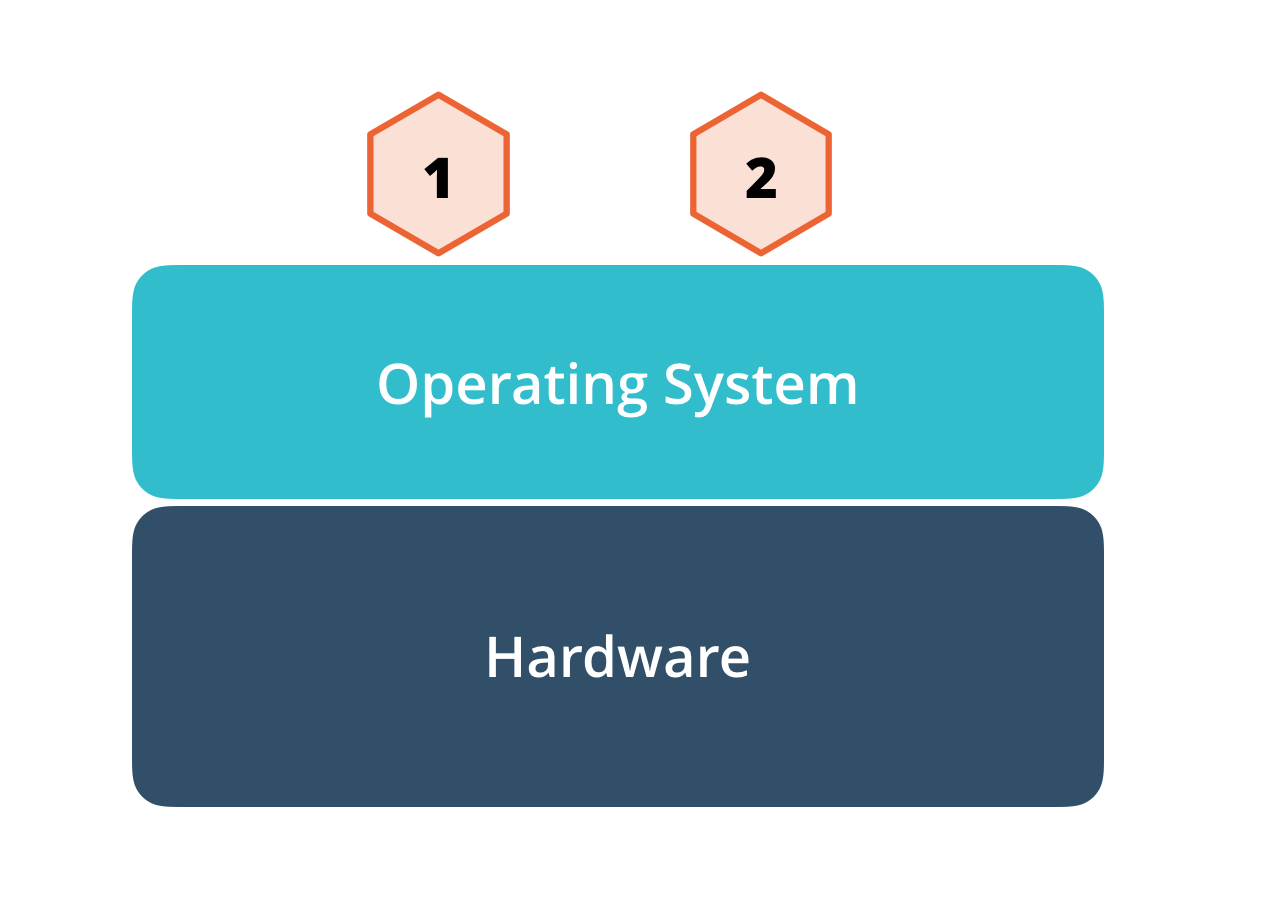

Deploy on the server

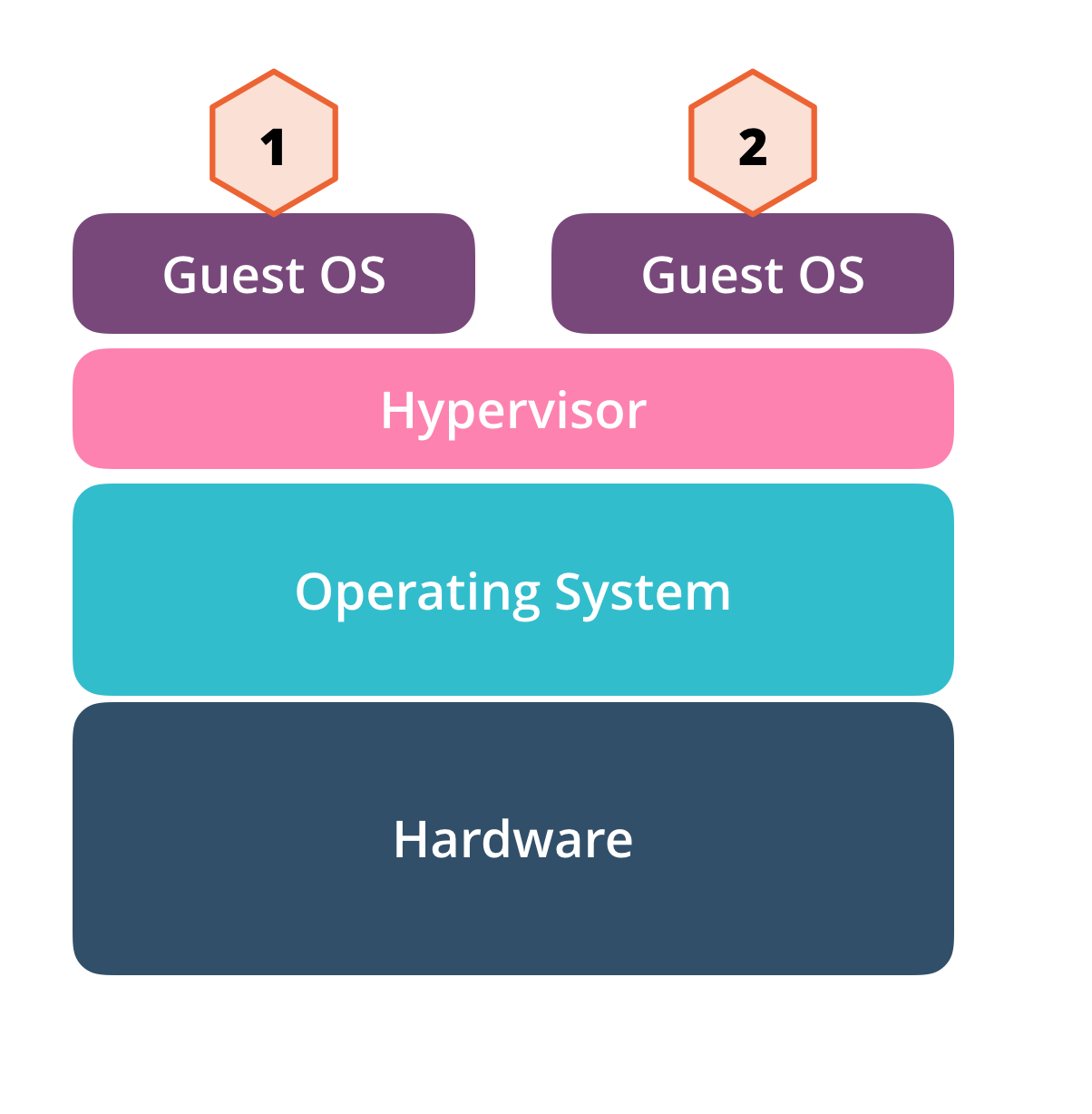

Deploy on VM

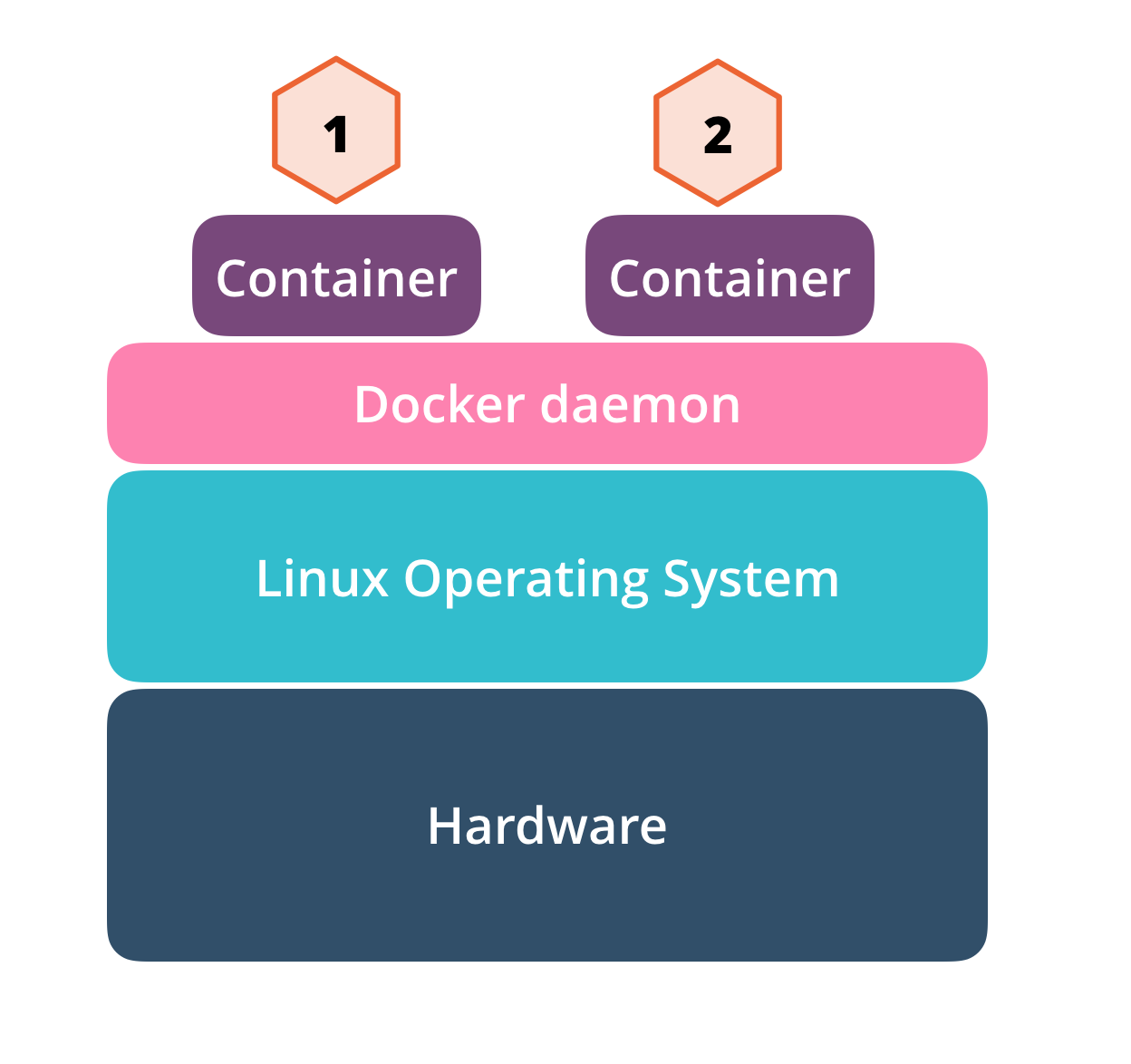

Deploy on Docker

Containers for shipping applications

What is a container?

- Collection of normal OS processes

- Running in isolation (process and resource isolation)

- Exploit features in the OS kernel to provide lightweight virtualization

- Hypervisor not required

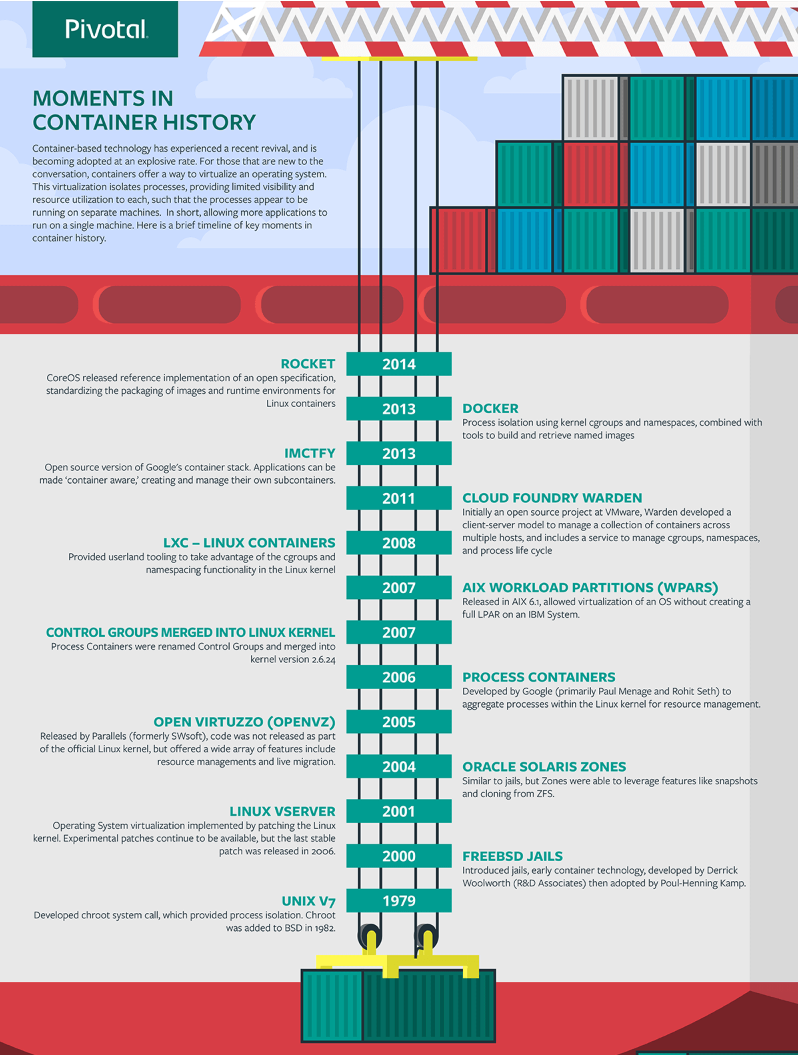

History of containers

Image source: https://content.pivotal.io/infographics/moments-in-container-history

What stopped widespread adoption?

- Not easy to use for developers and operations

- Did not have a standard runtime or image format

- Lack of standard tooling

- No standard remote APIs

- Focus was not on simplicity and user experience

So, why do we need Docker?

Docker and containers

Docker is a tool that helps ...

- To build container images

  - Images are portable across OS

  - Images are system packages of your application

- To run and link containers together

Docker components

- Docker Engine (CE/EE) – assembled from upstream Moby components

- Docker CLI

- Docker Compose

- Docker Machine

- Docker Swarm

- Docker Registry

- Docker Cloud

- Docker Enterprise Edition (EE)

  - Docker Trusted Registry

  - Docker Universal Control Plane

  - Certified components and support

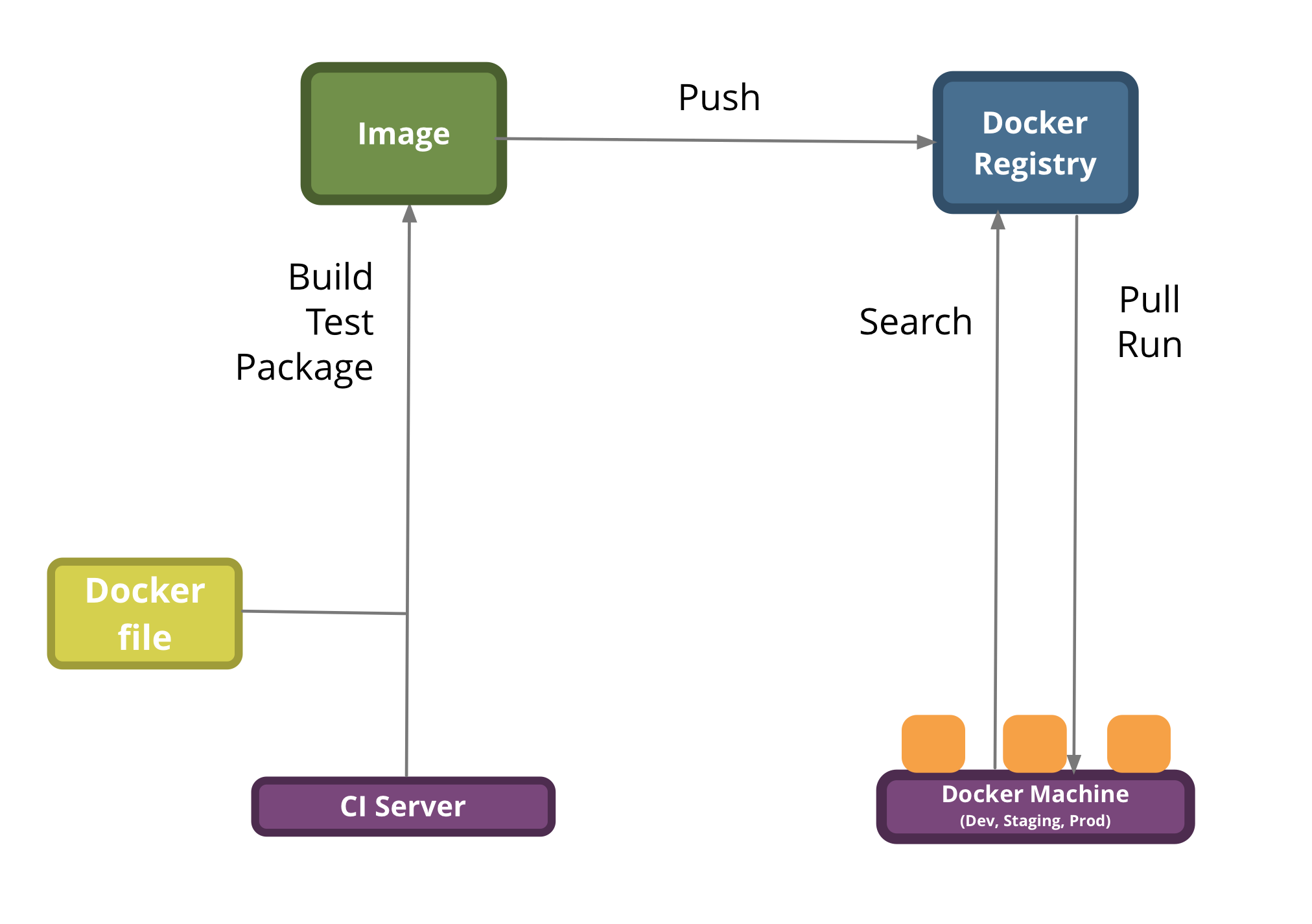

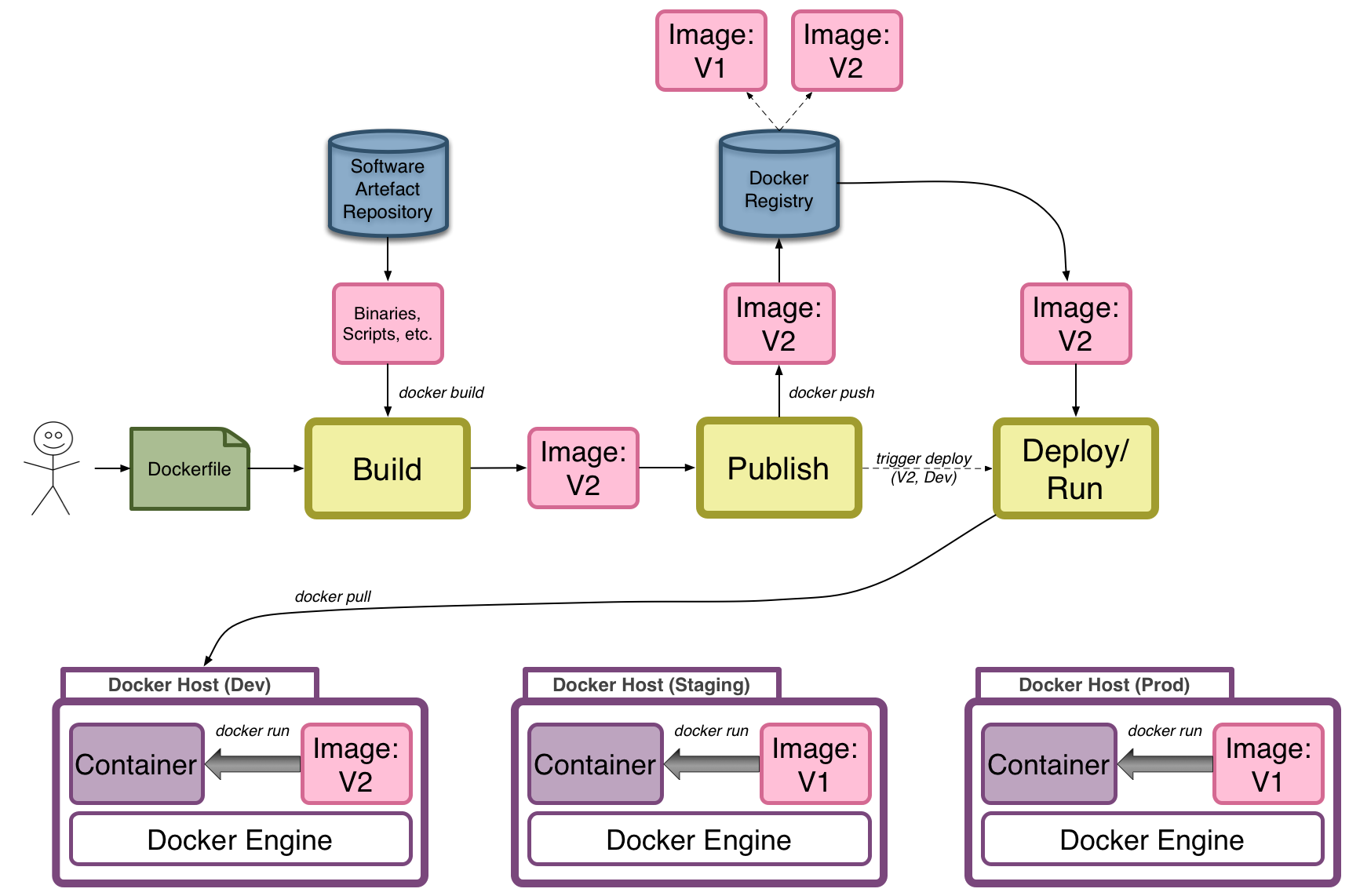

Deployment workflow

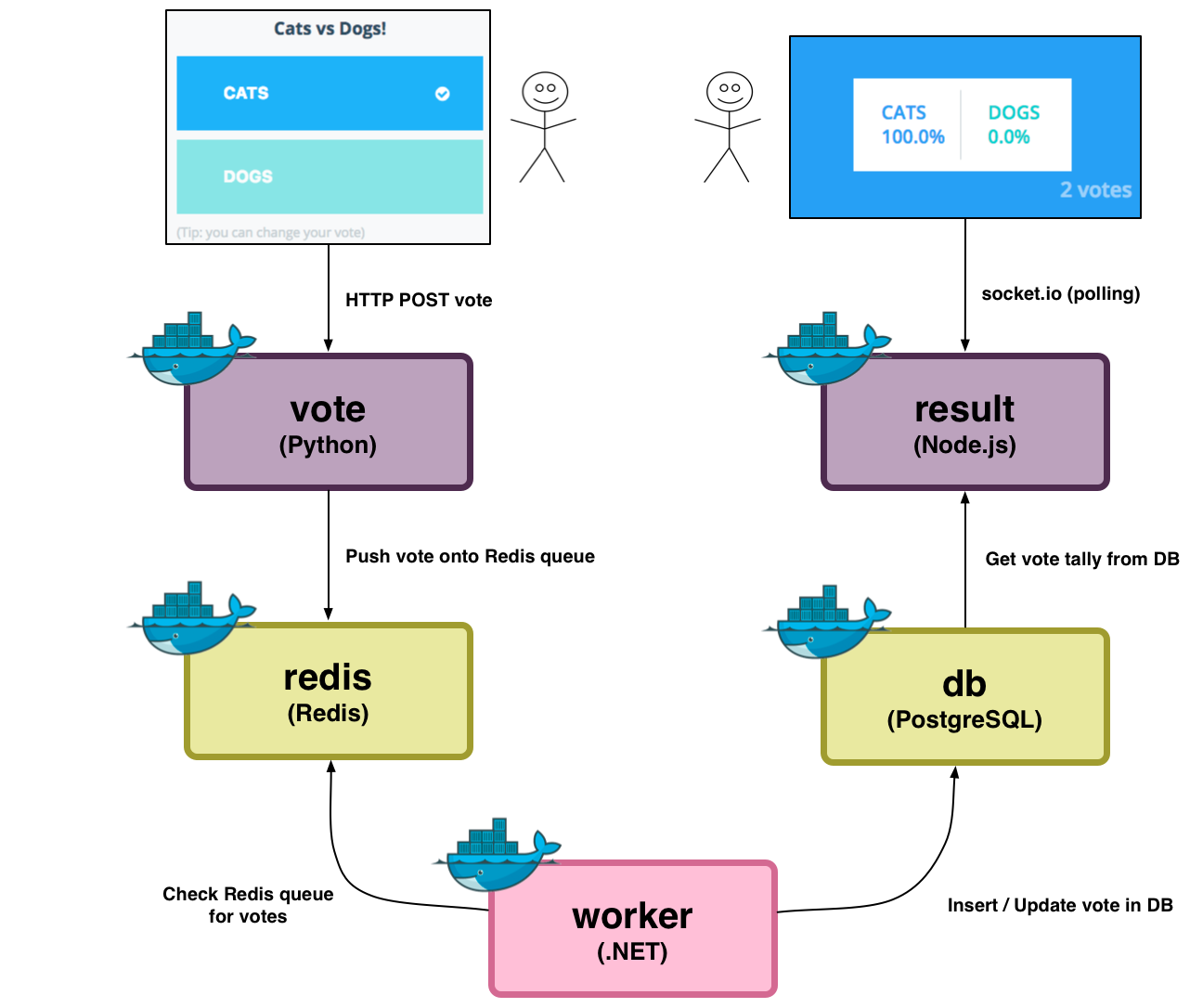

Intro to the Voting App

- In the tutorial, we will be using a sample application: Instavote

- This application is a good candidate to demo various Docker features because it is:

  - split over multiple services

  - based on a combination of different container OSes

  - made up of different service types: front-end, back-end, a queue and a database

  - ...that can be distributed over multiple Docker hosts

  - and scaled up or down in the number of service instances

Voting App - Vote

Voting App - Result

Voting App Architecture

Exercise: Intro to the Voting App

- In this exercise we deploy the Voting App to a single Docker host

- Complete the exercise: 01-Intro

Part 1 - Wrap-up

- Learnt about:

- What are Containers

- Why there was a need for Docker

- What is Docker

- ...and an intro to the Voting app

Part 1 - Tips and Advice

- Docker is evolving quickly

- Docker is great at enabling finer-grained, microservice architectures...

- ...but can be good for existing apps too

- Docker is not a silver bullet for all existing problems, but gives us tools to make our job easier

Part 2

The basics

We will explore a basic Build, Publish and Deploy/Run lifecycle using the Docker command-line client.

You will learn about:

- Building and tagging Docker images

- Docker Registries

- Running apps and services in Docker containers

- Command-line usage with the Docker client

- Basic deployment techniques

Docker Images

- Images:

  - Read-only templates that are used to create containers

  - Constructed from layered filesystems

  - Each layer adds to or replaces part of the filesystem below it

- Images typically contain:

  - A minimal OS distribution

  - Dependencies

  - A single application or service

- You can find images in public registries:

  - ... on Docker Hub / Docker Store

- You can even create your own images!
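
To make the layering idea concrete, here is a minimal Python sketch of how stacked layers resolve into the single filesystem view a container sees. It is a toy model, not how Docker stores images: the dict-per-layer representation, the `flatten_layers` name, and the use of `None` as a deletion marker (a "whiteout") are assumptions made for illustration.

```python
# Illustrative model only: each layer is a dict of path -> content.
# A value of None marks a file deleted by that layer (a "whiteout").
def flatten_layers(layers):
    """Merge layers bottom-to-top into the view a container would see."""
    view = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)   # upper layer removes the file
            else:
                view[path] = content   # upper layer adds or replaces the file
    return view


# Hypothetical layers, loosely following the nginx example in this deck.
base = {"/etc/os-release": "debian 8", "/bin/sh": "dash"}
nginx = {"/usr/sbin/nginx": "nginx binary", "/etc/nginx/nginx.conf": "defaults"}
config = {"/etc/nginx/nginx.conf": "daemon off;"}

image_view = flatten_layers([base, nginx, config])
print(image_view["/etc/nginx/nginx.conf"])  # the top-most layer wins
```

The lower layers are never modified; the upper layer simply shadows them, which is why several images can share the same base layers.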

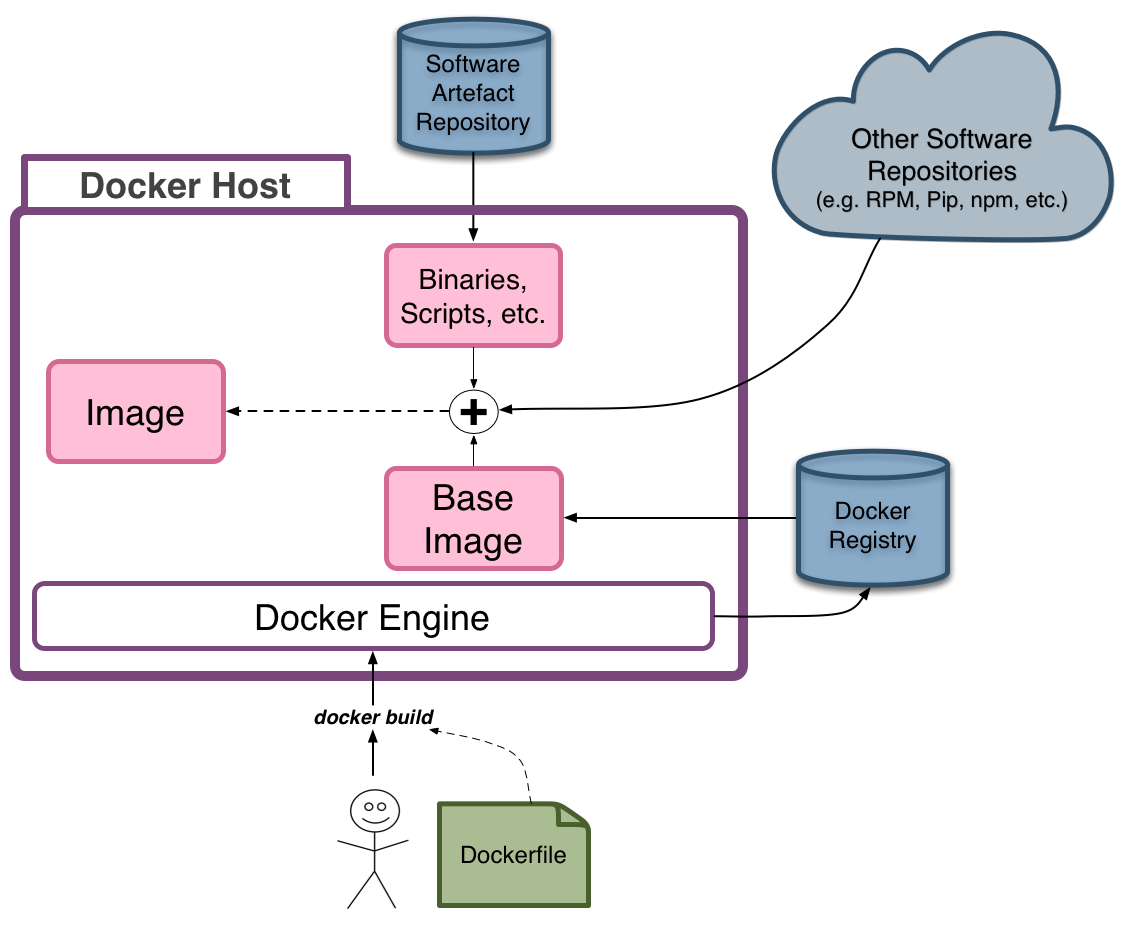

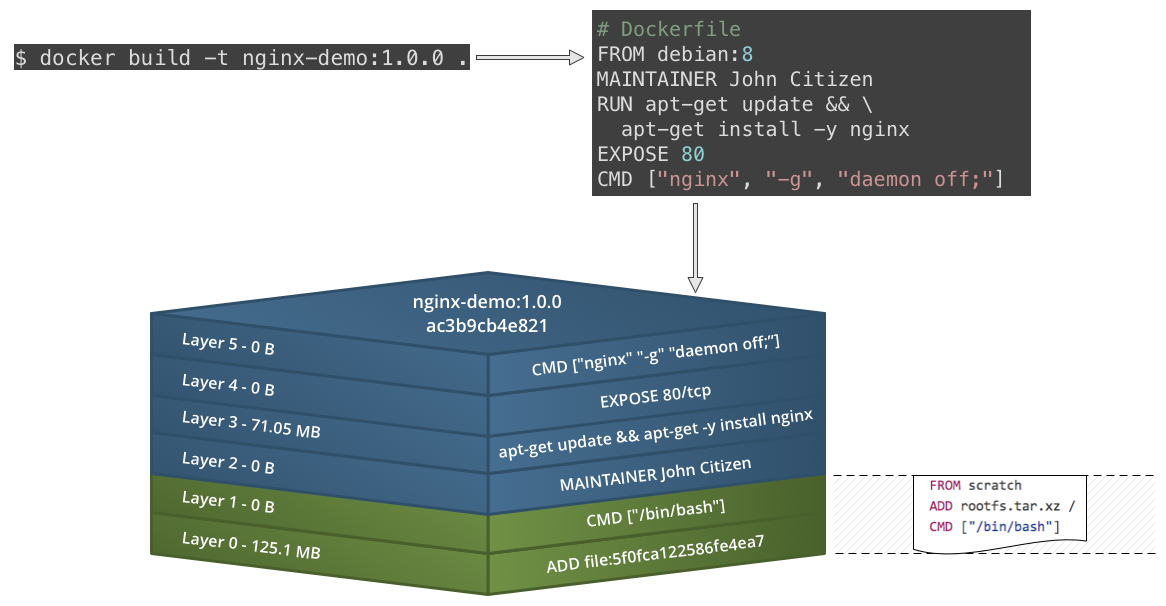

Docker Build: Creating Images

Building images with a Dockerfile

- Build Docker images using a `Dockerfile` and the `docker build` command

- Create a `Dockerfile`:

    FROM debian:8
    MAINTAINER John Citizen
    RUN apt-get update && \
        apt-get install -y nginx
    EXPOSE 80
    CMD ["nginx", "-g", "daemon off;"]

- Build the image:

    $ docker build -t nginx-demo:1.0.0 .
    # Where `.` is the directory containing the `Dockerfile`

- List your images:

    $ docker images
    REPOSITORY   TAG     IMAGE ID       CREATED          SIZE
    nginx-demo   1.0.0   f37c98ca1a29   36 minutes ago   196.4 MB

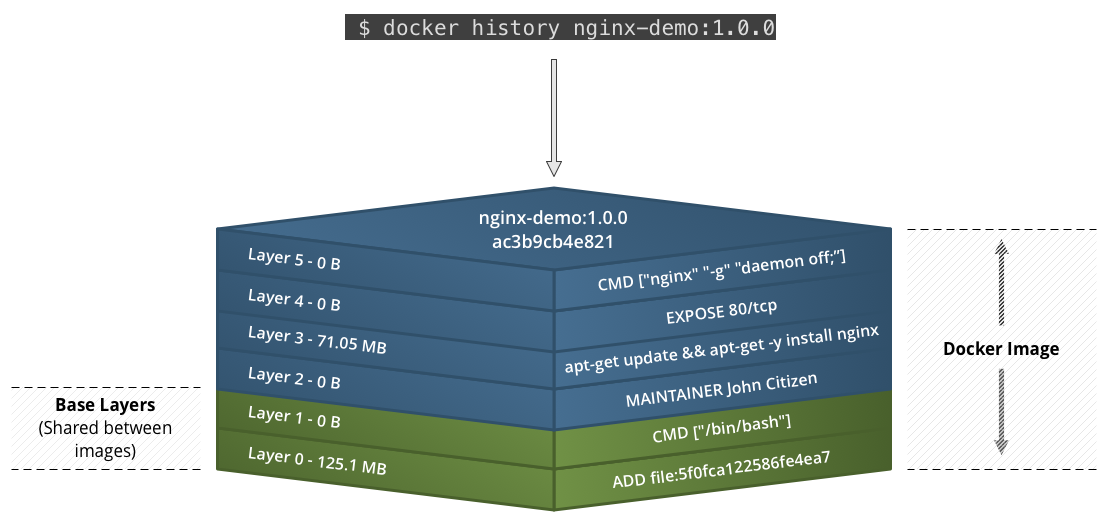

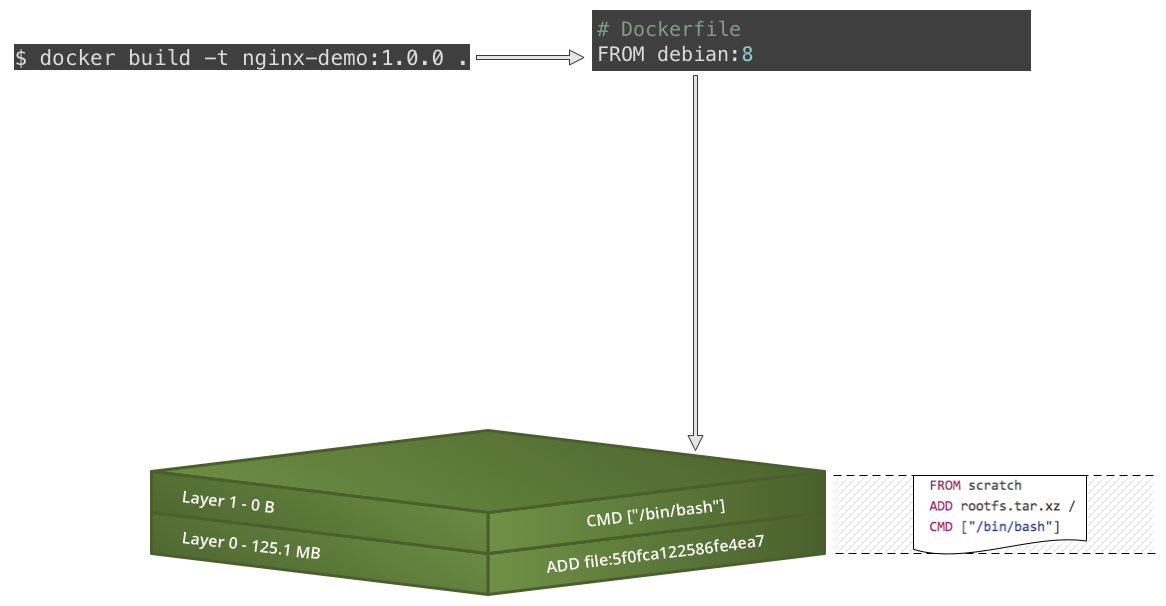

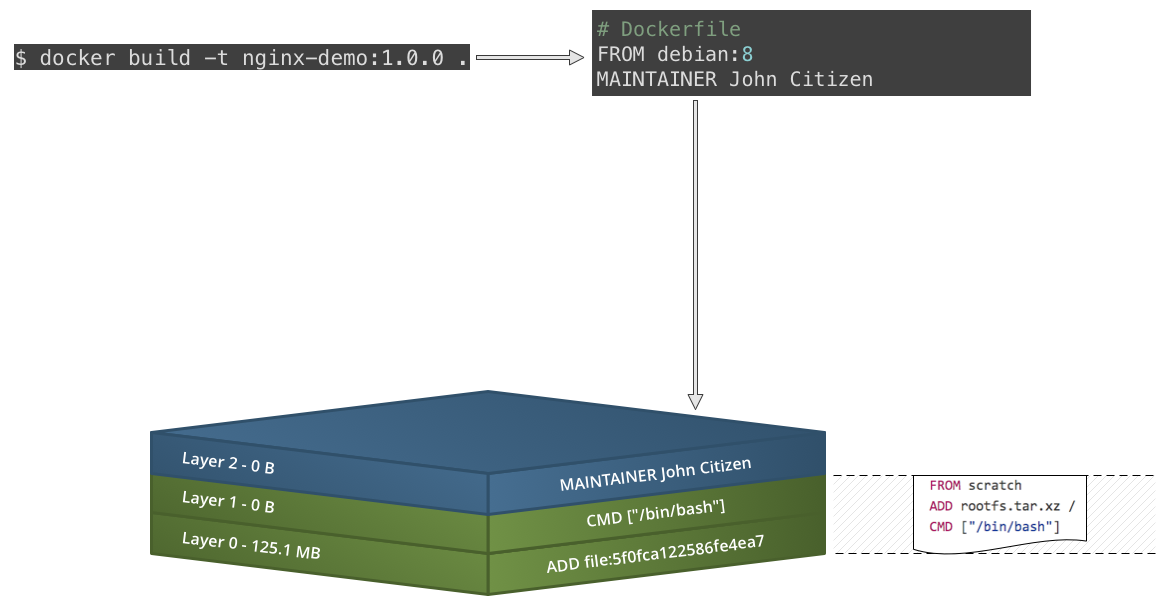

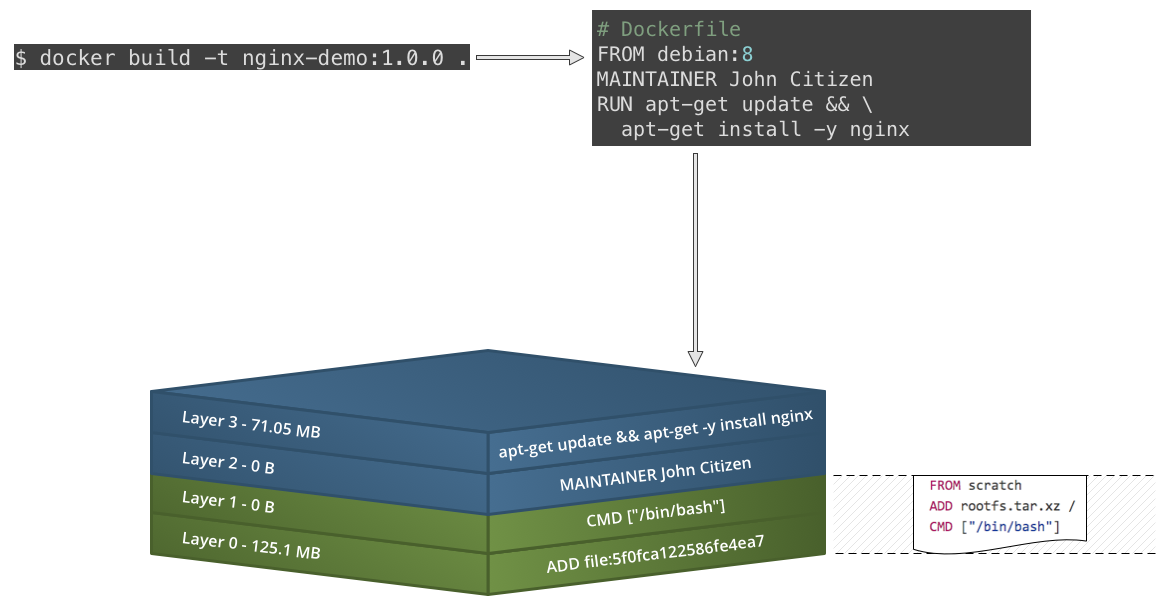

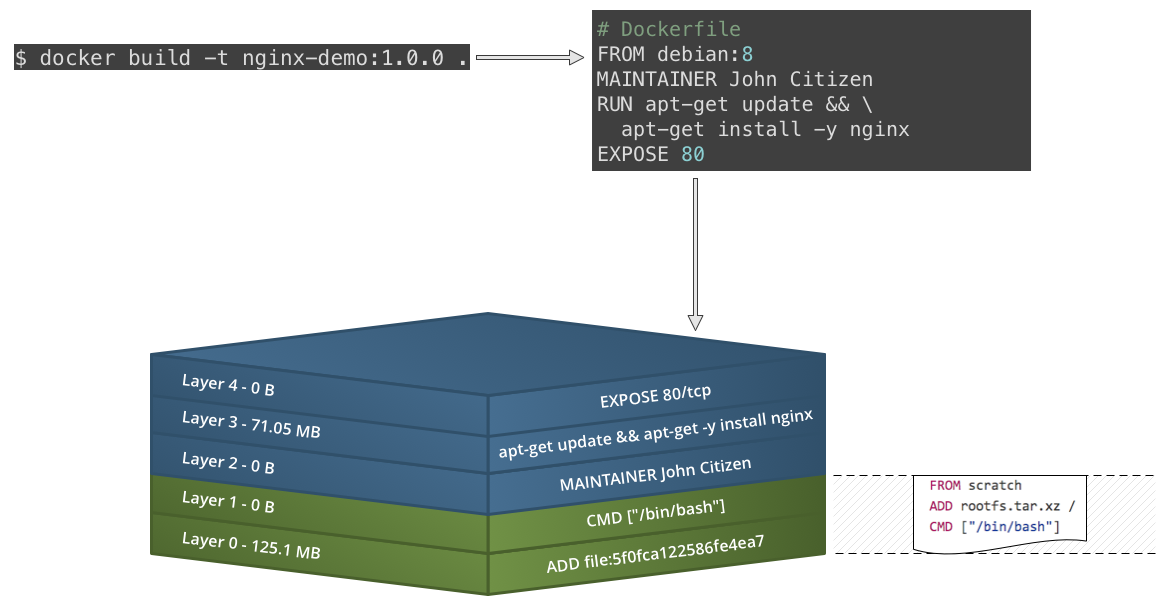

Image produced from Docker build

Slow motion replay...

Note: Docker 1.10+ no longer uses a randomly generated UUID for layers -- this is replaced by a secure content hash
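
As a rough illustration of what "content hash" means here, the sketch below derives an identifier purely from a layer's bytes. The `layer_id` helper and the byte strings are hypothetical stand-ins; Docker's real digests are computed over layer archives, which this example does not reproduce.

```python
import hashlib


def layer_id(layer_bytes: bytes) -> str:
    """Return a content-addressed identifier: same bytes, same ID."""
    return "sha256:" + hashlib.sha256(layer_bytes).hexdigest()


# Placeholder byte strings standing in for layer archives (assumption).
a = layer_id(b"layer contents with nginx installed")
b = layer_id(b"layer contents with nginx installed")
c = layer_id(b"layer contents with apache installed")

print(a == b)  # identical content gives an identical ID
print(a == c)  # different content gives a different ID
```

Because the ID depends only on content, identical layers can be recognised and tampering changes the ID.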

Docker Registries

Introduction

- The artefact repository for images is called a Docker Registry

- You can find and retrieve images

- You can publish your own images

- You can use a publicly hosted registry or run your own private registry

Registry options

- Docker Registry 2.x (Portus, Harbor)

- Docker Hub - Default public registry used by Docker for public and private repositories

- Docker Store – Marketplace for trusted and validated images – free, open source and commercial

- Docker Trusted Registry

- Cloud-provider hosted registries:

  - Amazon EC2 Container Registry (ECR)

  - Google Container Registry

  - Azure Container Registry

  - Quay.io

- Other registries:

  - GitLab Container Registry, Artifactory, Nexus

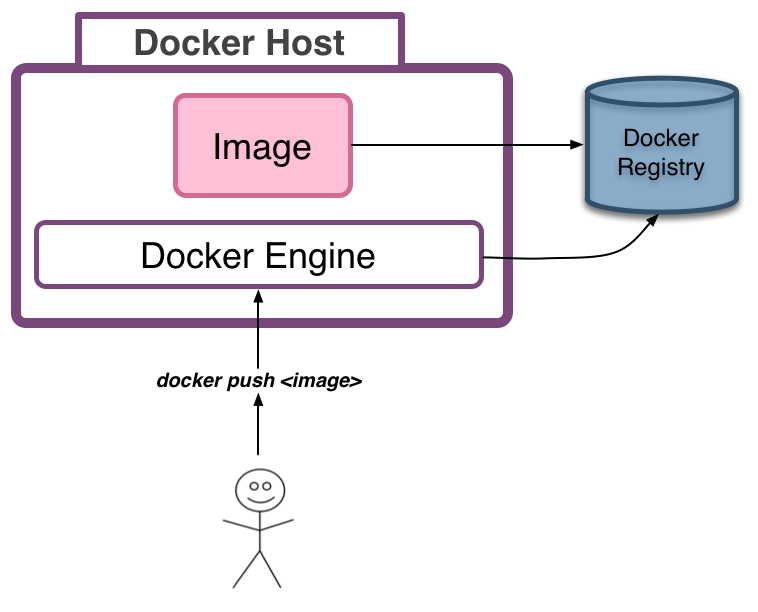

Docker Push: Publishing Images

Publishing images to a registry

- Publishing is done via the `docker push` command

- However, by default Docker would attempt to push our images to the Docker Hub/Store

- To publish to our own registry we need to tag the image with the registry server location (in our case `registry.local:5000`)

    $ docker tag nginx-demo:1.0.0 registry.local:5000/nginx-demo:1.0.0

- Now we can push the Docker image to the correct registry:

    $ docker push registry.local:5000/nginx-demo:1.0.0

- We can see images in our registry at the HTTP API endpoint:

    https://registry.local:5000/v2/_catalog
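
The reason tagging decides where a push goes is that the registry location is part of the image name itself. The Python sketch below is a simplified approximation of how a reference is split, not Docker's actual parser: the `parse_image_ref` name is hypothetical, and digest references (`@sha256:...`) and the implicit `library/` prefix for official images are deliberately not modelled.

```python
DEFAULT_REGISTRY = "docker.io"  # used when the name carries no registry host


def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag)."""
    first, sep, rest = ref.partition("/")
    # The first path component is treated as a registry host only if it
    # looks like one: it contains a dot or a port, or is "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, ref
    # A tag is whatever follows a colon in the last path component.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, "latest"
    return registry, repository, tag


print(parse_image_ref("nginx-demo:1.0.0"))
print(parse_image_ref("registry.local:5000/nginx-demo:1.0.0"))
```

The untagged name resolves to the default public registry, while the re-tagged name points at `registry.local:5000`.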

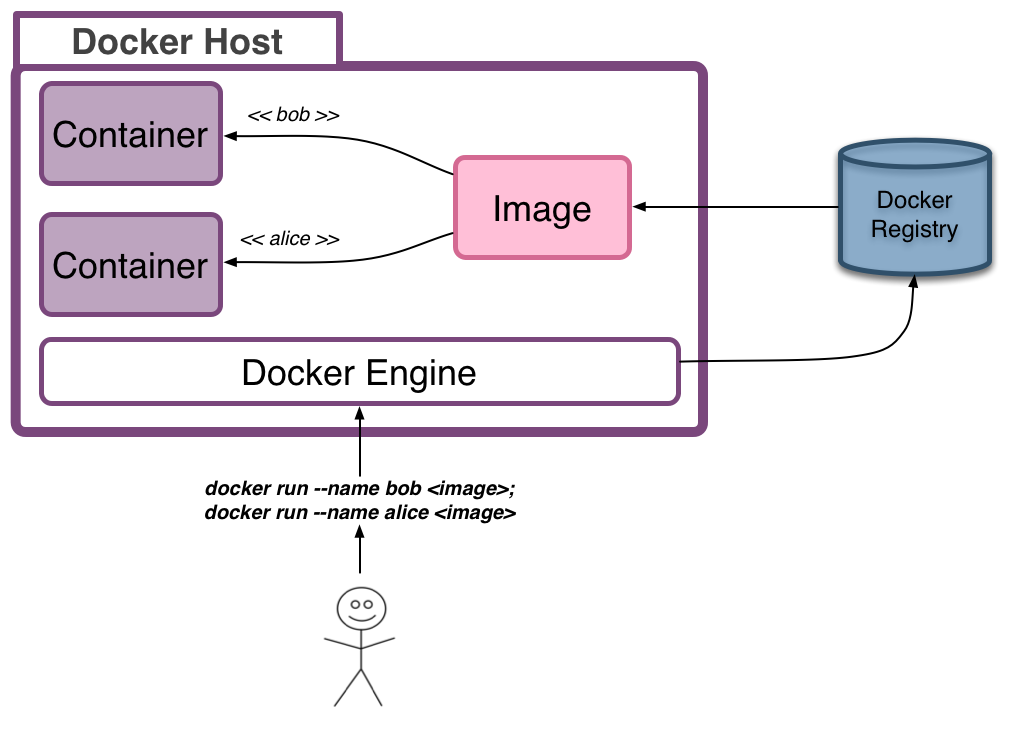

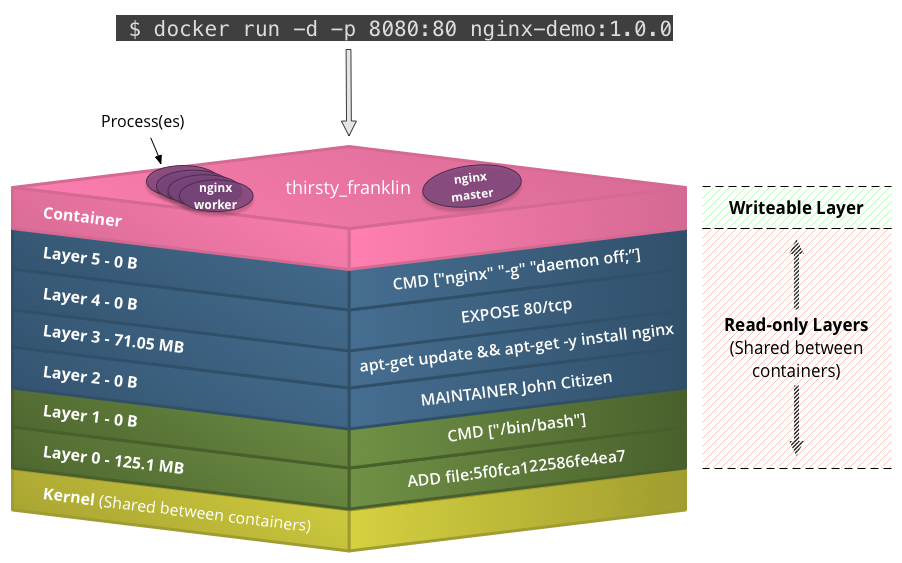

Docker Run: Deploying Images

Running Docker containers

- Containers are running instances of an image and consist of an operating system, user-added files, meta-data, and an executing process

- Containers add a writeable layer on top of an image (using a union file system) in which your application can then run and make changes

- Use the `docker run` command to launch a container:

    docker run --detach --publish 8080:80 registry.local:5000/nginx-demo:1.0.0

Docker Containers

- Copy-on-write storage

- Relies on union capable filesystems (aufs, UnionFS, overlay, ...)

Docker Compose

- Compose is a tool for defining and launching your multi-container application stacks

- You define your application stack in a file typically named `docker-compose.yml`

- `docker-compose` is a separate tool to the `docker` CLI

- Version 2 of the Docker Compose syntax only supports single-host deploys and is not recommended for production use

Sample docker-compose.yml file

- Define an application stack file (`docker-compose.yml`):

    version: "2"
    services:
      vote:
        image: registry.local:5000/vote
        ports:
          - "9000:80"
      redis:
        image: registry.local:5000/redis:alpine
      worker:
        image: registry.local:5000/worker
      db:
        image: registry.local:5000/postgres:9.4
      result:
        image: registry.local:5000/result
        ports:
          - "9001:80"

- Spin up this application stack:

    $ docker-compose up -d

- We can do other things with our stack too:

    $ docker-compose logs   # View container output logs
    $ docker-compose kill   # Terminate all containers
    $ docker-compose down   # Stop and remove containers and networks (images and volumes only with --rmi / --volumes)

Exercise: Docker CLI and Basics

- In this exercise we will use the Docker CLI to explore the basic Docker Build, Publish and Deploy/Run lifecycle

- Complete the exercise: 02-basics

Review Docker CI/CD Lifecycle

Build -> Publish -> Deploy/Run

Part 2 - Wrap-up

- Learnt about:

- ...basic Docker development lifecycle

- ...images and Dockerfiles

- ...Docker registries, including the Docker Hub

- ...deploying containers from images with `docker run` and `docker-compose`

- ...introduction to common Docker CLI commands

Part 2 - Tips and Advice

- Try to keep your Docker images small (e.g. base them on busybox, alpine, or debian base images)

- Don't use Docker containers like full blown VMs - Run a single process/service in each Docker container

- Use Docker Networks as an alternative to legacy links for communicating between containers

- Consider using OS service scripts* (e.g. Upstart, Systemd) to manage your containers (e.g. pass environment variables, keep them running, start on boot and/or in a specific order)

(*) We mean outside the container (on the host); avoid running an init system inside the container.

Part 3

Beyond the basics

We will look at advanced concepts that are needed when deploying your Dockerized applications to production

You will learn about:

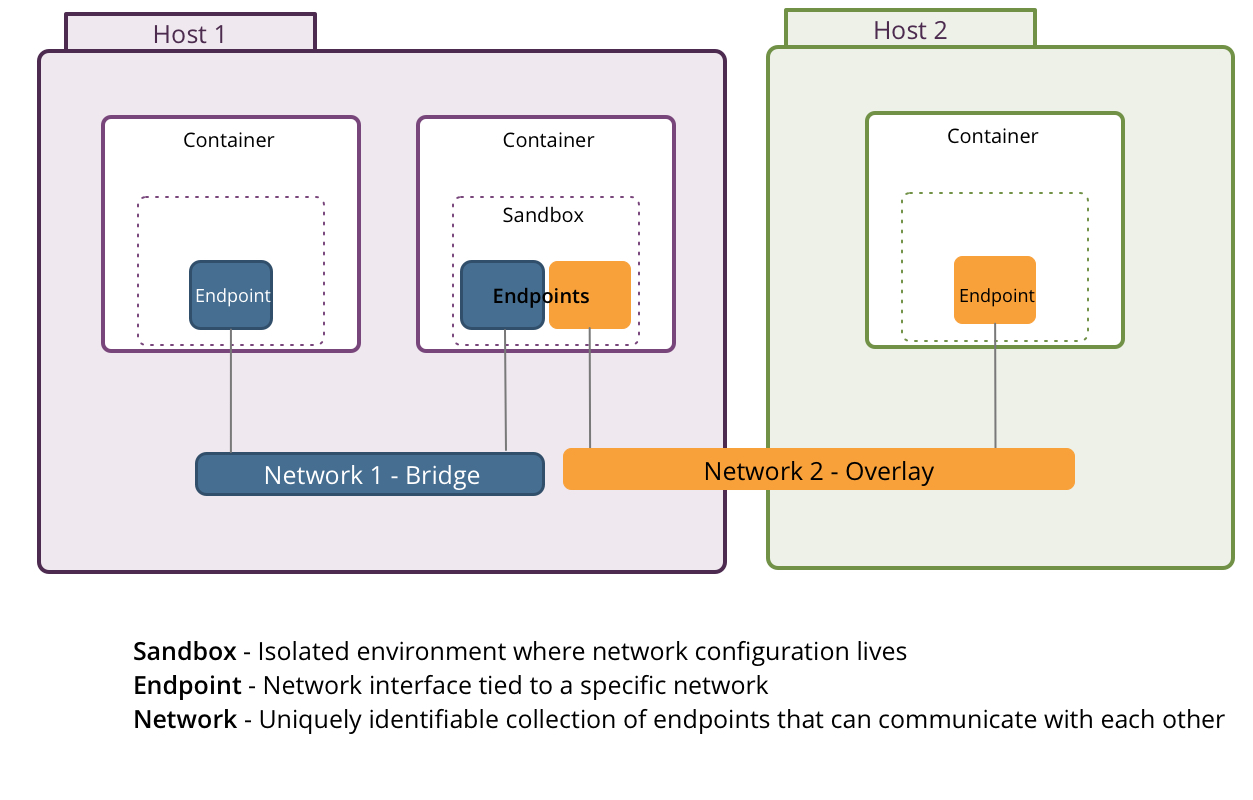

Networking

Docker networking

Docker uses virtual networks & software defined networking to:

- Connect containers together

- Connect containers to a host

- Connect containers on a multi host env (overlay networks)

For example:

    $ docker network ls
    $ docker network [create|rm|inspect|connect|disconnect] [NETWORK]
    $ docker run --network=[NETWORK]

Docker default networks

- None: Disable container networking

- Host: Container's IP config == Host IP config, public/direct network exposure

- Bridge (docker0): Virtual ethernet bridge that connects to the containers' eth0 interfaces

Multi host networks

Container-to-container communication across multiple hosts

- Grouping containers by subnet

- Better network isolation of containers

- Relies on overlay traffic encapsulation (tunneling)

    docker network create --subnet=10.0.1.0/24 [NETWORK]

Overlay Networks

- Overlay networks are part of User-Defined Networks

- Supports multi-host networking across Docker machine clusters

- Service discovery via embedded DNS server

- Relies on traffic encapsulation via tunnelling

- Outside swarm mode, require an external key-value store such as Consul, Etcd, or ZooKeeper

Container Network Model

Where are we?

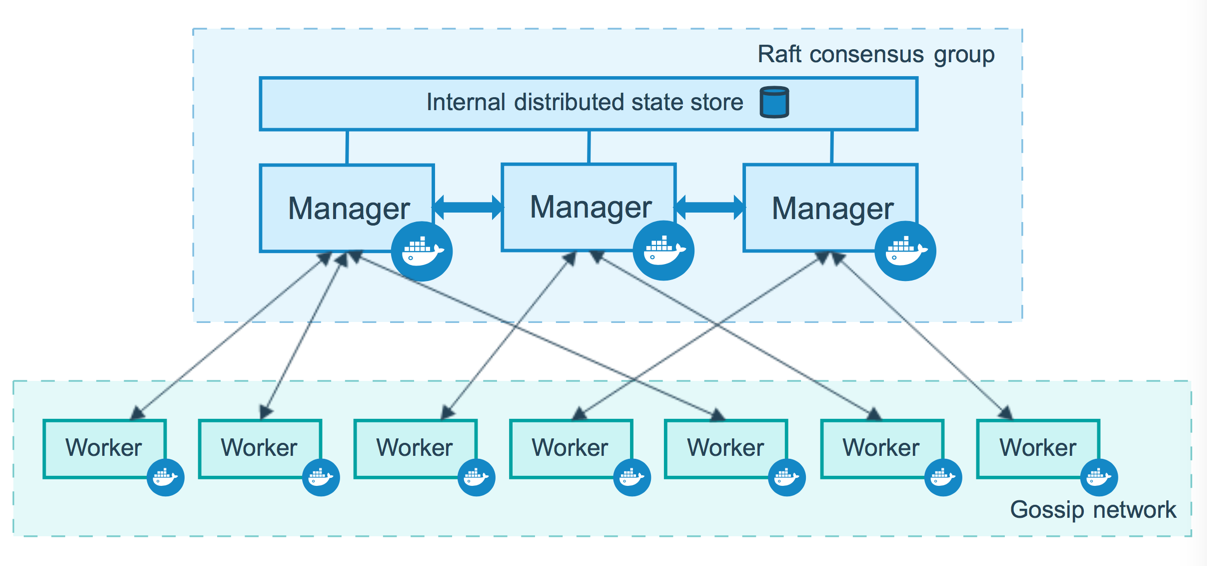

Clustering

For production

A real-world production environment does not usually consist of a single host running the application and services

We want to:

- Reduce single points of failure in the system

- Increase availability of our applications and services

- Horizontally scale our applications and services

We can do this by:

- Running multiple instances of each application/service behind load-balancers

- Distributing our instances across multiple hosts

Cluster concepts

- Dynamic load balancing

- Service discovery

- Desired state

- Self healing

- Auto scaling

- Automated host provisioning

Load balancing & Service discovery

Application instances and locations are not static

Service registry / inventory

- Discover/register all available services of the cluster

Load balancer

- Dynamically reconfigure load balancer(s)

- Route every request to a registered service

- Critical component (Design for high availability)

Tools

- Service discovery: Consul + Registrator, Etcd, Kubernetes proxy

- Load balancer/Reverse proxy: Traefik, Nginx, HAProxy
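
The interplay between a service registry and a load balancer can be sketched in a few lines of Python. This is an in-memory toy, assuming hypothetical class names (`ServiceRegistry`, `RoundRobinBalancer`) and made-up addresses; tools such as Consul or HAProxy work very differently in detail.

```python
import itertools


class ServiceRegistry:
    """In-memory stand-in for a service registry / inventory."""

    def __init__(self):
        self._instances = {}

    def register(self, service, address):
        self._instances.setdefault(service, []).append(address)

    def deregister(self, service, address):
        self._instances.get(service, []).remove(address)

    def lookup(self, service):
        return list(self._instances.get(service, []))


class RoundRobinBalancer:
    """Routes each request to the next registered instance."""

    def __init__(self, registry):
        self._registry = registry
        self._counter = itertools.count()

    def route(self, service):
        # Re-read the registry on every request, so the balancer is
        # "reconfigured" as soon as instances come and go.
        instances = self._registry.lookup(service)
        if not instances:
            raise LookupError(f"no registered instance of {service!r}")
        return instances[next(self._counter) % len(instances)]


registry = ServiceRegistry()
registry.register("vote", "10.0.1.11:80")   # hypothetical addresses
registry.register("vote", "10.0.1.12:80")
balancer = RoundRobinBalancer(registry)

picks = [balancer.route("vote") for _ in range(3)]
registry.deregister("vote", "10.0.1.11:80")  # an instance goes away
after_failure = balancer.route("vote")
print(picks, after_failure)
```

Requests alternate between the two instances, and once one is deregistered all traffic flows to the survivor without touching the balancer's configuration.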

Desired state

Based on the reconciliation model

- Concept similar to CM tools: Chef/Puppet/Ansible

- Configuration describes the desired state of infrastructure

- Cluster manager guarantees the desired state

- For example:

  - Desired state: "I want at least 2 instances of my frontend service to be running"

  - If one of the frontend containers goes down, the cluster manager creates a new one
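
The reconciliation model boils down to comparing desired and observed state and emitting corrective actions. Below is a minimal Python sketch under stated assumptions: the `reconcile` function and its action tuples are hypothetical, and it targets an exact instance count rather than the "at least" wording of the example.

```python
def reconcile(desired, running):
    """Return the actions needed to move `running` towards `desired`.

    desired: dict of service name -> wanted instance count
    running: dict of service name -> observed instance count
    """
    actions = []
    for service, want in desired.items():
        have = running.get(service, 0)
        if have < want:
            actions.append(("start", service, want - have))
        elif have > want:
            actions.append(("stop", service, have - want))
    return actions


desired = {"frontend": 2, "worker": 1}
running = {"frontend": 1, "worker": 1}   # one frontend container just died

print(reconcile(desired, running))  # [('start', 'frontend', 1)]
```

A cluster manager would run a loop like this continuously, so the same mechanism covers first deployment, failures, and scaling changes.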

Self healing

Design for failure

- Failure detection and automated remediation based on service healthchecks

Service health

- Cluster manager checks if each service is healthy

  - If yes, nothing to be done

  - If not, different healing mechanisms: Service relocation / restart / replication / auto-scaling

Host health

- How long does it take to run?

    $ docker -H tcp://[host]:2376 info

- ...If longer than a few seconds then the daemon may be dead

- ...How will you automatically spin up a new, replacement node?
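
The "does it answer within a few seconds?" test for host health can be expressed as a timed probe. The Python sketch below is one possible shape, assuming a hypothetical `responds_within` helper; the `check` callable is a placeholder for whatever actually queries the host (for example a wrapper around the `docker info` call), which is not implemented here.

```python
import concurrent.futures
import time


def responds_within(check, timeout_s):
    """Run `check()` in a worker thread; True only if it finishes in time."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(check)
    try:
        future.result(timeout=timeout_s)
        return True
    except concurrent.futures.TimeoutError:
        return False                 # too slow: treat the host as unhealthy
    except Exception:
        return False                 # the check itself failed
    finally:
        pool.shutdown(wait=False)    # do not block on a hung check


# Placeholder checks standing in for a real host probe (assumption).
healthy = responds_within(lambda: "ok", timeout_s=1.0)
hung = responds_within(lambda: time.sleep(0.5), timeout_s=0.05)
print(healthy, hung)
```

What happens after a failed probe (replacing the node, rescheduling its containers) is the part that needs to be automated separately.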

Auto scaling

Host level

Scaling up/down:

- Up: CPU utilisation on a node / the cluster exceeds 80% for 15 mins

- Down: Memory utilisation on a node / the cluster is under 40% for 15 mins

Tightly coupled with infrastructure

- OpenStack Heat

- AWS CloudFormation / CloudWatch alarms and triggers

- HashiCorp Terraform

Need experimentation to adapt it to your applications
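
The threshold rules above translate directly into a small decision function. This Python sketch assumes one utilisation sample per minute and a hypothetical `scaling_decision` helper; real setups would express the same rule through the infrastructure tooling listed above.

```python
def scaling_decision(cpu_samples, mem_samples, window=15):
    """Decide on host scaling from per-minute utilisation samples (percent).

    Scale up if CPU stayed above 80% for the whole window; scale down if
    memory stayed under 40% for the whole window; otherwise hold.
    """
    recent_cpu = cpu_samples[-window:]
    recent_mem = mem_samples[-window:]
    if len(recent_cpu) == window and all(s > 80 for s in recent_cpu):
        return "scale-up"
    if len(recent_mem) == window and all(s < 40 for s in recent_mem):
        return "scale-down"
    return "hold"


print(scaling_decision([85] * 15, [50] * 15))         # sustained CPU pressure
print(scaling_decision([85] * 14 + [70], [50] * 15))  # one dip resets the rule
```

Requiring the condition to hold for the full window avoids reacting to short spikes; the 80%, 40% and 15-minute values are the ones from the slide and would need tuning per application.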

Auto scaling

Container level

Tightly coupled with orchestration tool:

Automated Container Provisioning

Kubernetes - Pod definition / Replication controller

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: busmeme-rc
      labels:
        name: web
    spec:
      replicas: 2 # tells the replication controller to run 2 pods matching the template
      selector:
        name: web
      template: # create pods using pod definition in this template
        metadata:
          labels:
            name: web
        spec:
          containers:
            - image: mongo
              name: mongo
              ports:
                - name: mongo
                  containerPort: 27017
                  hostPort: 27017
              volumeMounts:
                - name: mongo-persistent-storage
                  mountPath: /data/db
            - image: minillinim/busmemegenerator
              name: web
              ports:
                - containerPort: 3000
              env:
                - name: NODE_ENV
                  value: "production"
                - name: PORT
                  value: "3000"
                - name: BM_MONGODB_URI
                  value: "mongodb://localhost/app-toto"
          volumes:
            - name: mongo-persistent-storage
              hostPath:
                path: /data/db

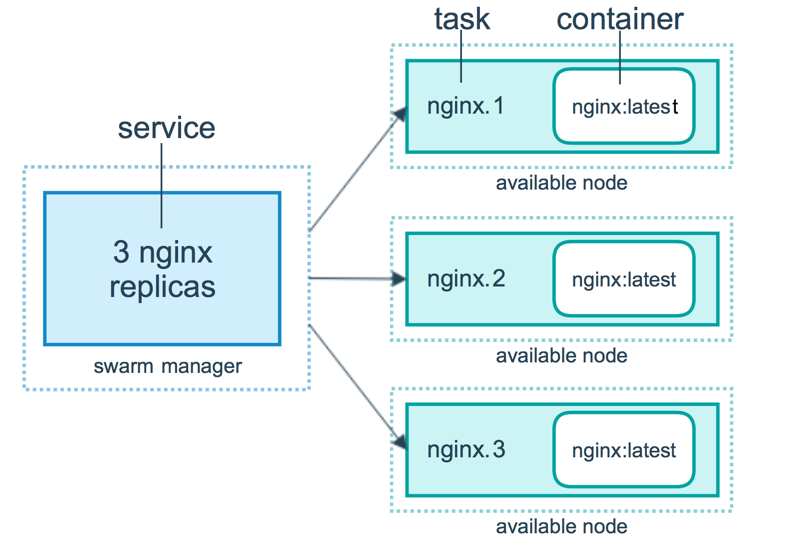

Docker Swarm mode

Cluster manager and workers

Docker Swarm mode

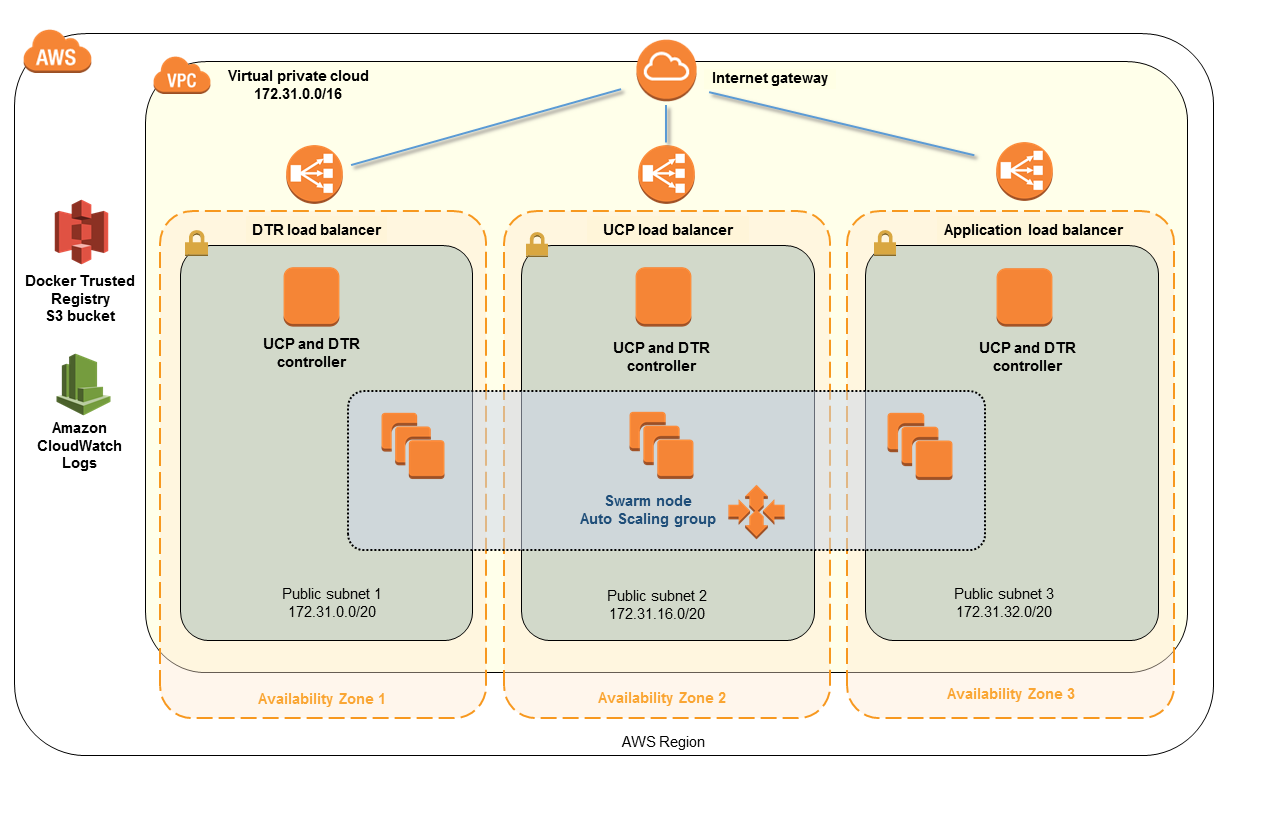

Docker EE for AWS

Docker Swarm mode

Services and Tasks

Docker Compose V3

- Docker now supports multi-host deployments with Docker Compose files via the stack concept

- Simple, consistent laptop (single host) to production workflow (Swarm mode cluster) experience

- Imperative to declarative scripts

Exercise: Clustering concepts

- In this exercise we create a Docker Swarm-mode cluster running with 3 Docker hosts

- Complete the exercise: 03-advanced-clustering

Where are we?

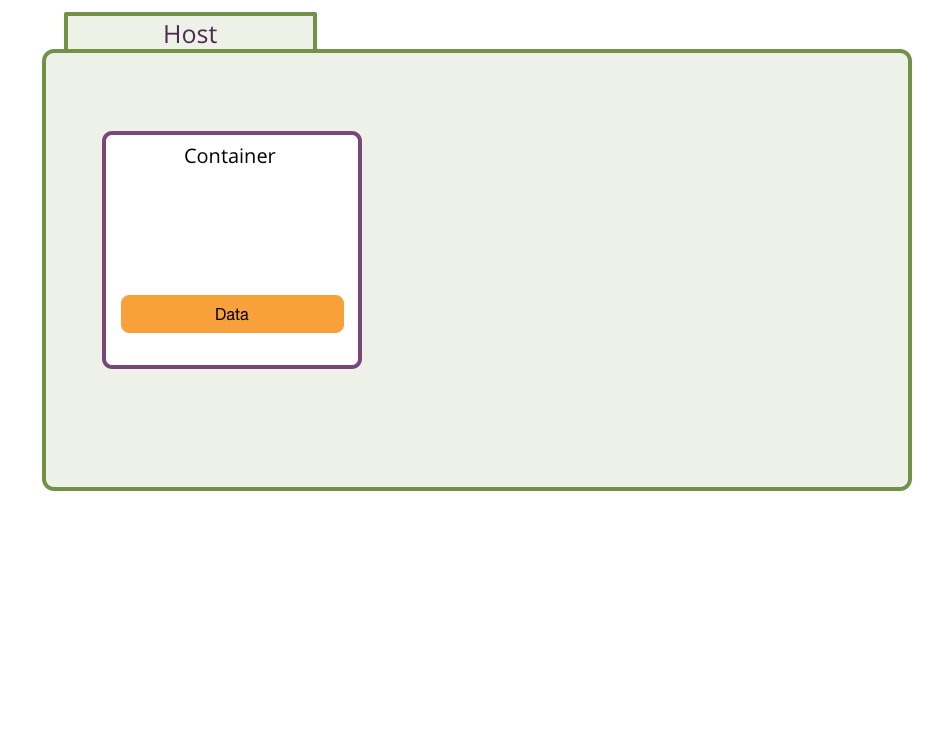

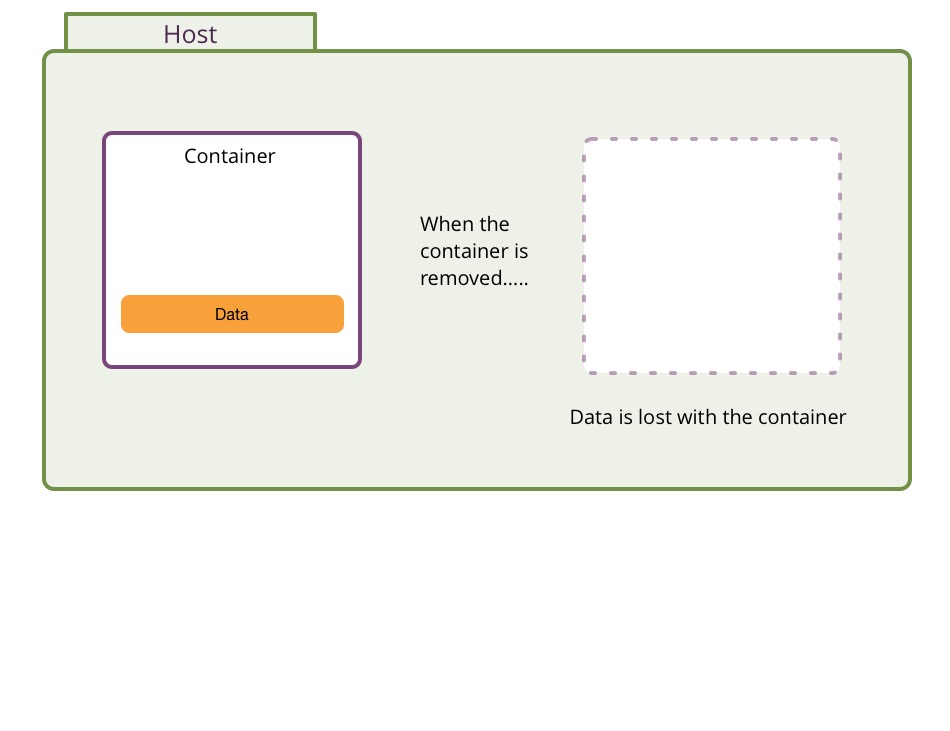

Managing data in a container

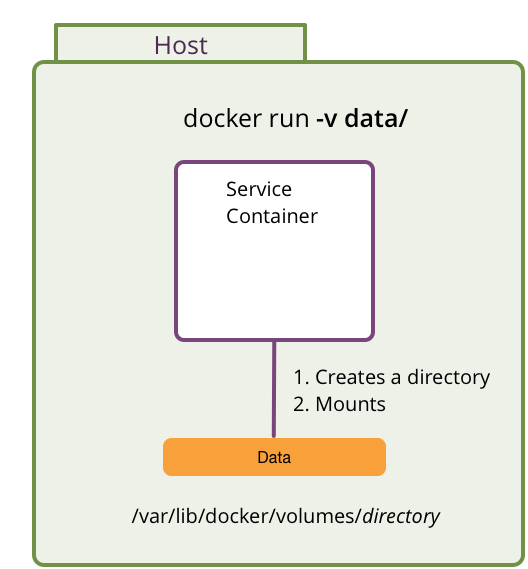

Data Volumes

- Directories/Files outside of the Union File System

- Normal directories on the host file system

- I/O performance on a volume is the same as I/O on the host

- Volume content is not included in the docker image

- Any change to volume content (via RUN) is not part of image

- Can be shared and reused across containers

- Persists even if the container itself is deleted

Data persistence across Docker hosts

Distributed filesystems

- Data can be shared and reused across hosts

- NFS / GlusterFS / Ceph / Flocker

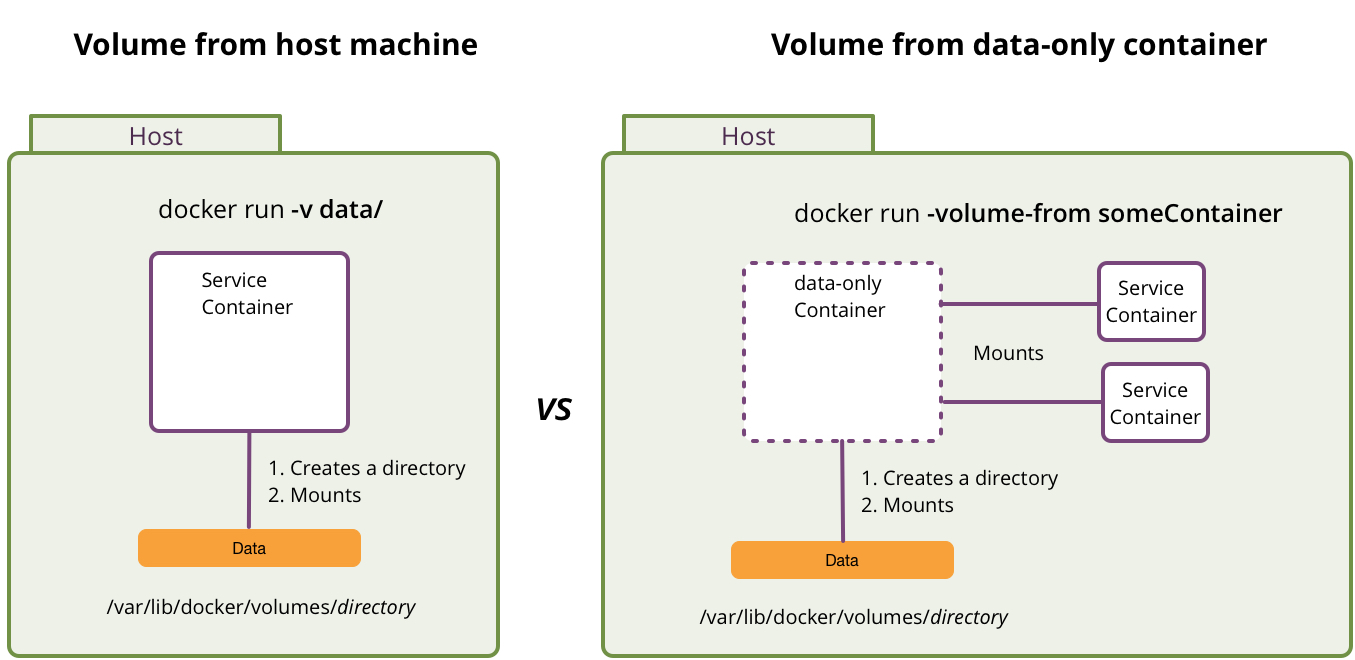

Volumes mounted from host

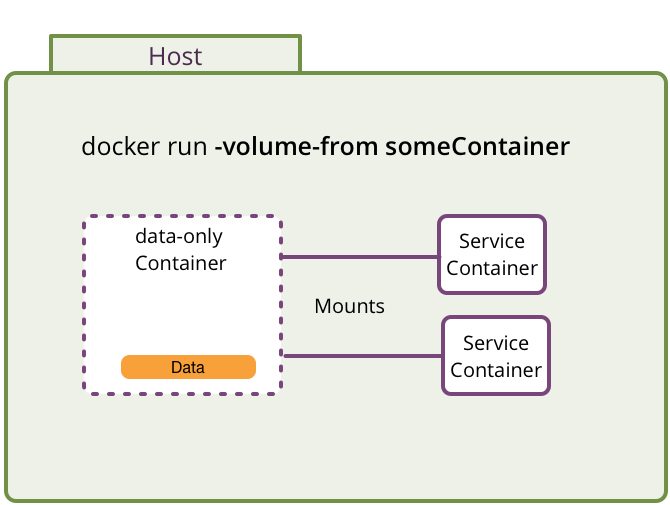

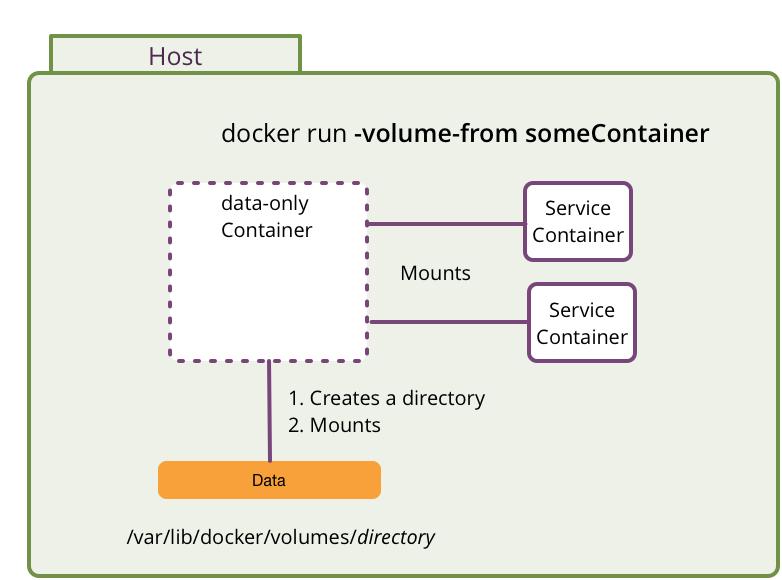

Volumes mounted from other containers (data-only containers)

Volumes mounted from host vs data-only containers

Possible mount points as Data Volumes

- Host file

    docker run -v ~/.a-file:/destination

- Host directory

    docker run -v /src/:/destination

- Named volume via a volume driver

    docker run --volume-driver=flocker -v a-named-volume:/destination

- Data-only container

    docker create -v /some-data --name store
    docker run --volumes-from store

Where are we?

Security aspects

Containers reduce attack surfaces and isolate applications to only the required components, interfaces, libraries and network connections

Aaron Grattafiori, NCC Group

Docker security efforts

- Docker is part of the Vendor Security Alliance (VSA)

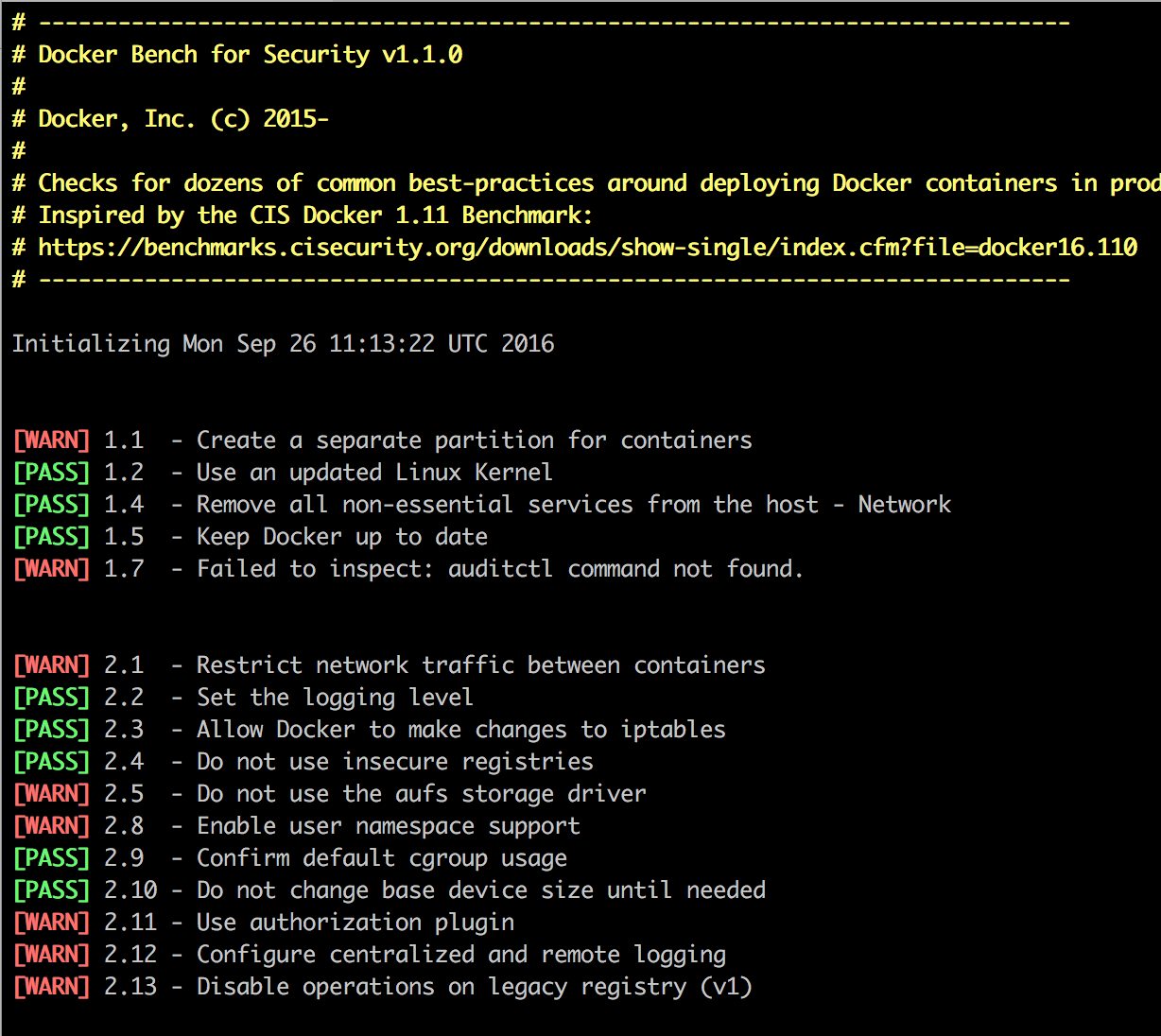

Members include: Docker, Uber, Dropbox, Palantir, Twitter, Square, Atlassian, GoDaddy and Airbnb

- Docker Security Bench

Docker security benchmark

Security levels

- Docker daemon configuration

- Docker registry

- Images

- Container-to-host security

- Container-to-container security

- Container security

- Secrets management

Docker daemon security

Mitigate container breakouts

- Host machine should run only the essential services

- Disable default inter-container communication

    dockerd --icc=false

- Daemon user namespace options

- User Namespaces (Docker >= 1.10) allow the root user in a container to be mapped to a non uid-0 user outside the container
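
The effect of user namespace remapping is plain arithmetic on UIDs. The Python sketch below assumes a subordinate UID range in the `user:start:count` form used by `/etc/subuid`; the `host_uid` helper and the concrete range values are illustrative, not taken from the tutorial environment.

```python
def host_uid(container_uid, subuid_start, subuid_count):
    """Map a UID inside the container to the UID the host kernel sees."""
    if not 0 <= container_uid < subuid_count:
        raise ValueError("UID falls outside the mapped range")
    return subuid_start + container_uid


# Hypothetical subordinate range: start 100000, 65536 UIDs wide.
print(host_uid(0, 100000, 65536))    # container root -> 100000 on the host
print(host_uid(33, 100000, 65536))   # container UID 33 -> 100033 on the host
```

Root inside the container therefore ends up as an unprivileged UID on the host, which limits the damage of a container breakout.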

Docker registry security

- Do not use insecure registries

- Use TLS/SSL communication with Docker clients

- Enterprise-class authentication: AD/LDAP support

- Role based access control: Who is able to pull or push images?

- Tools on top of Docker registry:

  - SUSE Portus

  - VMware Harbor

  - Red Hat Satellite 6

Image security

- Build your own images if possible

- Use only trusted base images from the Docker Hub / Store

- Use SHASUM check, Docker Trusted Registry, or set up Notary

- Rebuild the images to include security patches

- Use the USER instruction to set the UID to use when running the image

- Avoid large distributions (Ubuntu, CentOS) / prefer minimal distributions: Alpine, BusyBox, scratch

  - Reduces attack surface

  - Less patching requirements

  - Download time decreased

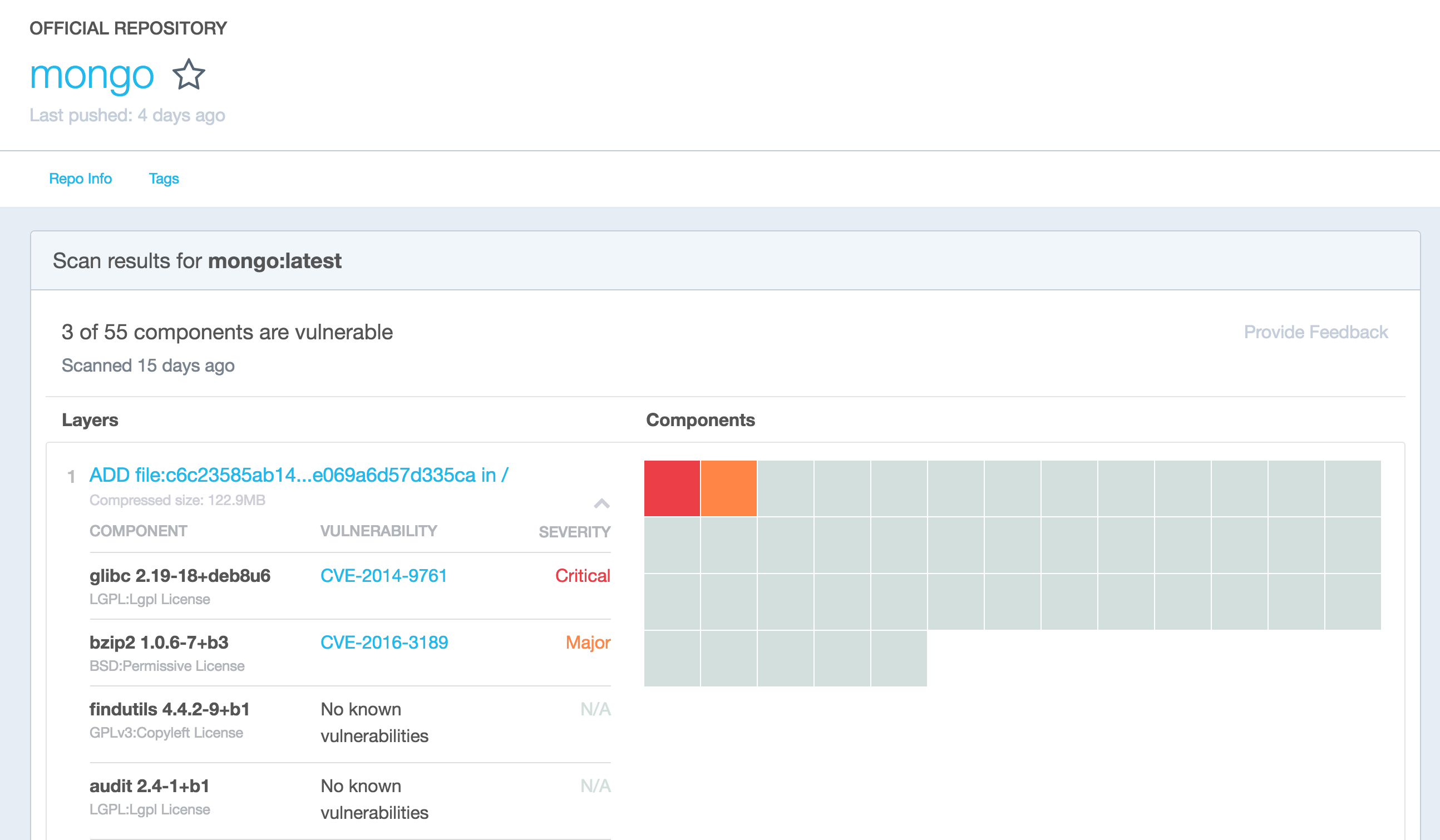

Docker vulnerability scanner

Source Docker Hub (Must be logged in)

Container-to-host security

- Since Docker 1.11, the number of active processes running inside a container can be limited to prevent fork bombs

- This requires a Linux kernel >= 4.3 built with CONFIG_CGROUP_PIDS=y

    $ docker run --pids-limit=64

Container-to-container security

Least access strategy

- Restrict incoming traffic to the right host interface

    $ docker run --detach --publish [ip]:[source-port]:[dest-port] [image]

- Run containers with a read-only root filesystem

    $ docker run -d --read-only [image]

- Mount volumes read-only

    $ docker run -d -v /src/webapp:/webapp:ro [image]

Container security

- Mandatory Access Control (MAC)

- Create your own SELinux, AppArmor profiles, Seccomp profiles

- Reduce kernel capabilities

- Use non privileged containers

Secrets management

Where are we?

Monitor process / resources usage

Some useful tools to monitor containers:

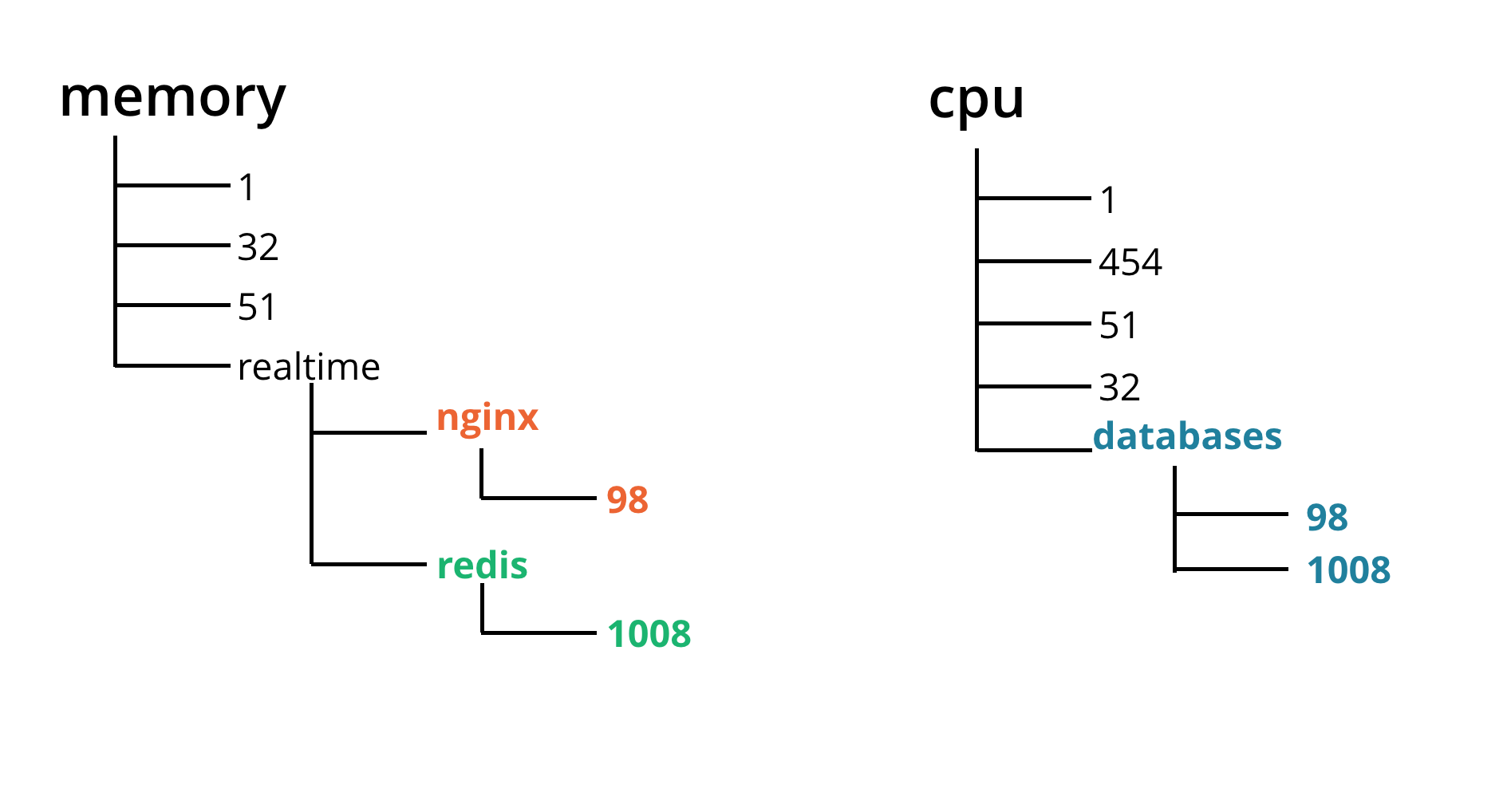

    $ docker top
    $ docker stats

Control groups & Namespaces

Control groups

- Resource isolation with cgroups: CPU, Memory, Block I/O, Network, Devices

Namespaces

- Process isolation with namespaces

Resource isolation - control groups

Namespaces

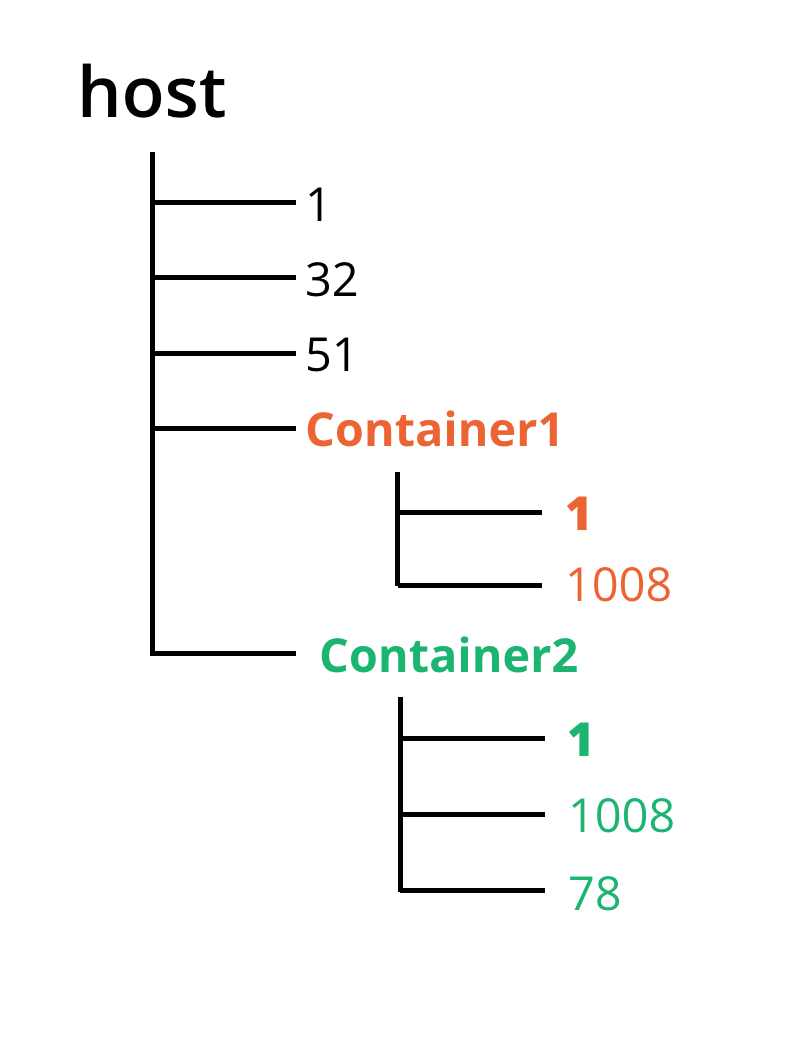

- Limit the resource visibility

- Processes with their own view of the system

- pid: Each PID namespace has its own numbering (parent PID 1)

- net: Define network interfaces / rules

- mnt: Provide its own rootfs (~chroot)

- user: Map processes GID/UID to host GID/UID

- uts: Hostname and NIS domain name

- ipc: System V IPC, POSIX message queues

Process isolation - namespaces

Exercise: Monitoring

- In this exercise we monitor the Voting App container with cAdvisor

- Complete the exercise: 03-advanced-monitoring

Where are we?

Logging

Why is it important?

- Ephemeral nature of containers implies loss of context: where/for how long/which containers are running?

- Log correlation between containers

- Need for live analysis

- Retention of information in case of catastrophic events

Centralised logging

Analyze cluster-wide issues

- Docker daemon logs

- Centralized and remote containers logging

- Containers lifecycle / Exit signals

- Health check / Restart policies

Container logs

Log correlation / log content is important

- Timestamp with time zone (Ideally UTC)

- Docker host FQDN/IP

- Container ID

- Image name and tag
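
One way to carry these correlation fields is to emit each log line as structured JSON. The Python sketch below is an assumption about format, not something the tutorial prescribes: the `make_log_record` helper, the field names, and the sample host and image values are all hypothetical.

```python
import json
from datetime import datetime, timezone


def make_log_record(message, host, container_id, image, now=None):
    """Build one JSON log line carrying the correlation fields."""
    now = now or datetime.now(timezone.utc)
    return json.dumps({
        "timestamp": now.astimezone(timezone.utc).isoformat(),  # always UTC
        "host": host,
        "container_id": container_id,
        "image": image,
        "message": message,
    }, sort_keys=True)


line = make_log_record(
    "vote received",
    host="docker-01.example.local",           # hypothetical host FQDN
    container_id="f37c98ca1a29",
    image="registry.local:5000/vote:1.0.0",   # hypothetical tag
)
print(line)
```

With every line carrying the same fields, a centralised log store can group entries by host, container, or image version even after the container itself is gone.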

Logging strategies

Depends on OS / existing log strategy / centralized logging solution

- Default logging driver: json-file

- Other options: journald, fluentd, splunk, awslogs

- Log collectors as containers and host log forwarding: logspout, sumologic

Exercise: Logging

- In this exercise we use Fluentd to collect and store Docker containers' logs

- Complete the exercise: 03-advanced-logging

Where are we?

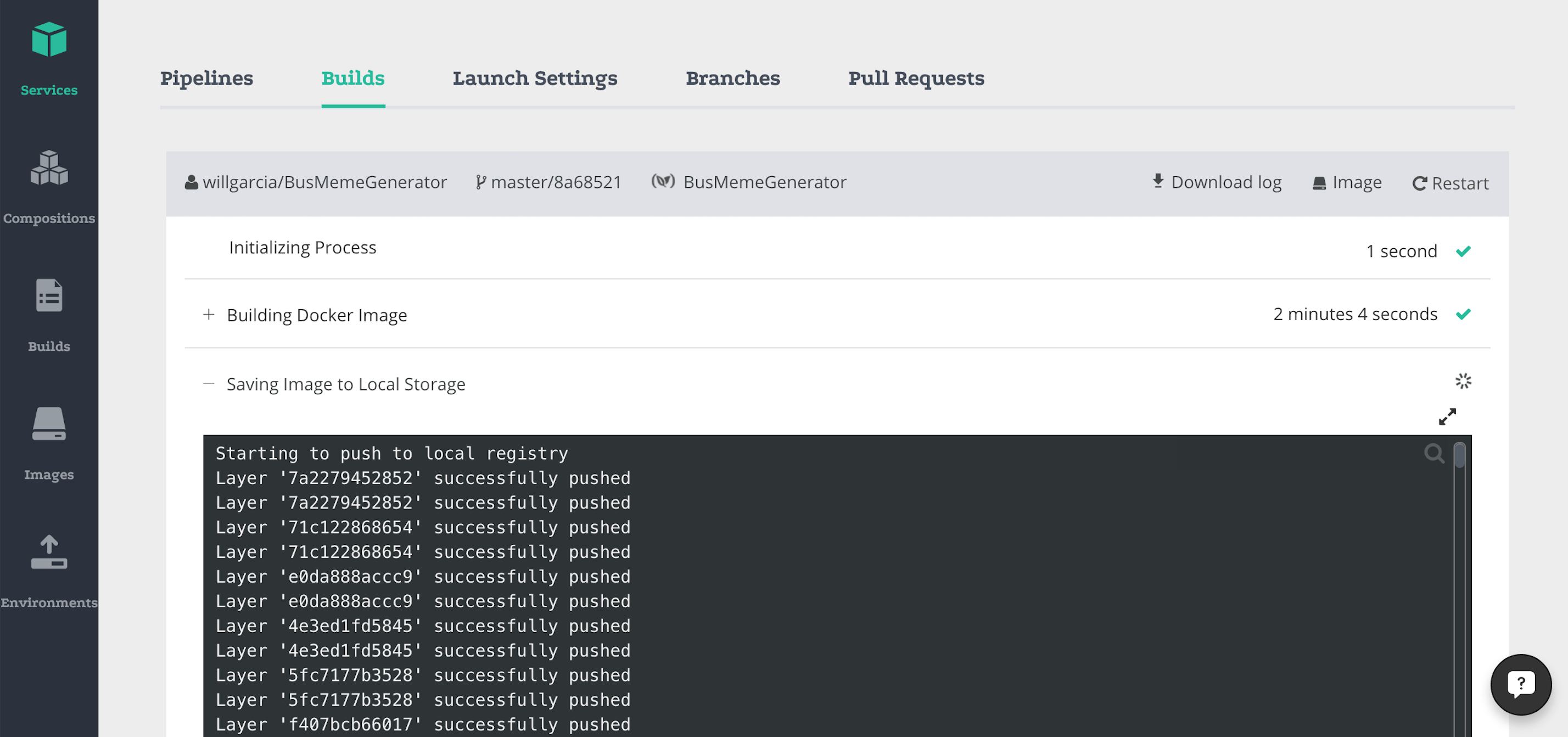

Continuous Integration

Automated build

Build images on git repository events: push, tags

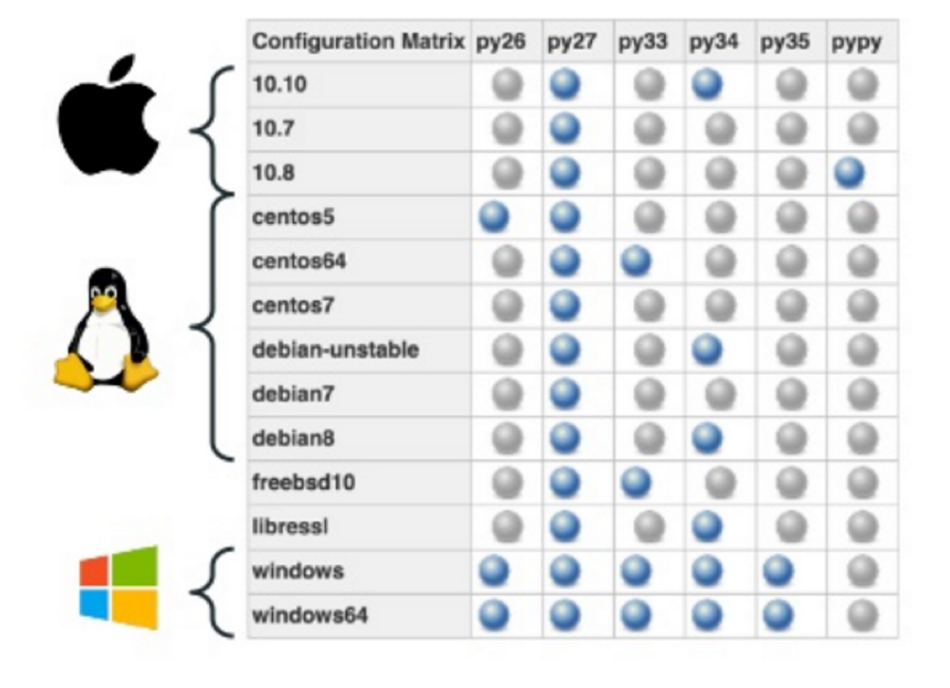

CI: Matrix testing

Refactoring / upgrades made easier

- Use Docker for regression testing or to test new OS/versions

- Run tests in parallel

For example, when you want to run tests like this:

CI: Container usage tips

- Dev/prod parity: Build once, deploy everywhere

- Do not bundle dev/test dependencies in your images

    $ docker run myprod:image sh -c "npm install --dev && npm test"

- Include project/build number in Docker image tags

    $ docker tag ${imageName} ${imageName}:${version}.${buildNumber}

- Use volumes to keep test reports outside of containers so they remain available after the tests

- Use volumes to cache files and reduce testing times (software dependencies are good candidates: npm, m2, composer, etc)
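
The version-plus-build-number tagging scheme can be wrapped in a small helper that a CI job calls before `docker tag`. This Python sketch is illustrative: `build_image_tag` is a hypothetical name, and the pattern reflects Docker's tag rules as understood here (at most 128 characters of letters, digits, `_`, `.` and `-`, not starting with `.` or `-`).

```python
import re

# Tag grammar: up to 128 chars of [A-Za-z0-9_.-], not starting with "." or "-".
TAG_PATTERN = re.compile(r"^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$")


def build_image_tag(image_name, version, build_number):
    """Return 'image:version.build', rejecting tags Docker would refuse."""
    tag = f"{version}.{build_number}"
    if not TAG_PATTERN.match(tag):
        raise ValueError(f"invalid Docker tag: {tag!r}")
    return f"{image_name}:{tag}"


# Hypothetical version and build number supplied by the CI server.
print(build_image_tag("registry.local:5000/vote", "1.4.2", 87))
```

Failing fast on an invalid tag keeps a bad version string from surfacing later as a confusing push error.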

CI: Dockerfile/image quality checks

Lint your Dockerfile

- Good images respect simple but important principles

- Hadolint -- Dockerfile linter

- Useful rules:

- DL3002: Do not switch to root USER

- DL3007: Using latest is prone to errors if the image will ever update

- DL3020: Use COPY instead of ADD for files and folders

CI: other considerations

- Create a separate partition to extend disks

- Frequently run docker-gc to remove old containers/images/volumes

Part 3 - Tips and Advice

- Volumes != Persistence

- Volumes are not garbage collected

- It is OK to use the same image for a stateful (database) container and its corresponding data-only container

- Use load balancers (like HAProxy) to scale your services

- Put containers with sensitive data on their own host

- Ensure your services provide healthcheck endpoints so that load balancers can stop routing traffic to unhealthy instances

- Cache images whenever possible; downloads take a long time

Part 3 - Wrap-up

- Learnt about:

- Cluster concepts

- Networking, Storage, Security

- Resource management / Monitoring

- Logging

- CI/CD, Testing

Part 4

Platforms

Which platform should I choose?

It depends!

Docker CE

- Community edition

- Assembled from upstream components in the Moby project

Docker EE

- Enterprise version of CE

- Commercial support

- Certified components, containers and plugins

- Docker Trusted Registry (DTR), RBAC, LDAP/AD integration

- Docker Security Scanning, Multi-tenancy

Docker Cloud

- Cloud based continuous delivery

- Automated builds, testing, security scanning

- Teams and organisations

- Hosted registry (Docker Hub)

- Provision and manage swarms -- Swarm mode

Docker for Mac/Windows

- More native experience than Docker Toolbox

- Integrates with Docker Cloud (Swarms)

- More efficient use of resources (xhyve, Hyper-V) - no need for VirtualBox and Docker Machine

Docker for AWS/Azure/GCP

- Fully-baked, opinionated, tested setup for cloud providers

- Deploy via templates for CloudFormation, Resource Manager, Deployment Manager

Kubernetes

- The most widely adopted container orchestration platform

- Designed around Google’s internal experience of operating containers at scale

- Helm – Kubernetes Package Manager

Kubernetes

Cloud offerings

- IBM Bluemix Container Service

- Google Container Engine (GKE)

- Azure Container Service

- Rancher (with Kubernetes backend)

- Deis Workflow (Heroku-like, 12-factor PaaS built on top of Kubernetes)

AWS options

AWS EC2 Container Service (ECS)

- Managed container scheduling and orchestration

- Uses AWS resources under the hood (EC2, ALB, IAM, etc.)

- Only works on AWS

AWS Elastic Beanstalk

- Simplified, opinionated infrastructure setup on AWS

- Single container – EC2

- Multi-container – ECS

Docker CE/EE for AWS (discussed earlier)

Part 5

Next steps, future trends and Q&A

Next steps after this tutorial

Congratulations! You've made it to the end :)

What now?

- Go back over the tutorial material on your own:

- Review scripts, configuration, source code for the app and services, etc.

- Read through the linked references in the slides and READMEs

- Try the suggested Homework in the READMEs

- Experiment!

Adopting Docker In Your Own Organisation?

- Think about Dev & Test first...

- Then focus on getting one app or service into production!

Docker for Dev & Test

- Consider using it as a way to create prod-like environments on developers' laptops

- Use it in your CI process to build up and throw away instances of your app

- Run your build tool chain from within containers too!

Your first app...

- Once you're happy with Dev & Test, get an app into prod!

- Start with something low risk, and ideally stateless, but that does have a good change frequency

- Sit with it a bit on prod before you take the next steps

Other Advice

- Get log aggregation and monitoring in place early

- Only look at cluster management once you understand Docker and its edge-cases properly

- You may not need advanced schedulers like Mesos and Kubernetes for your Enterprise apps just yet - but it's worth understanding what they provide to know if you need them or can just get away with swarm-mode and your own scripts

Embrace the Docker mindset!

Really make sure the Docker mindset is understood - otherwise you may end up fighting the tech, so...

- One app/service per container

- Images as build artifacts

- Immutable servers!

Future Trends?

- Let's take a look...

Cluster management, scheduling and orchestration

- DC/OS (Mesos, Marathon, and more)

- Kubernetes

- Swarm (now swarm-mode)

- Nomad

Hosted Container-As-A-Service (CaaS) Offerings

- AWS ECS

- Hosted Kubernetes: (Google Container Engine, Tectonic, OpenShift Online)

- Docker Cloud (formerly Tutum)

- Joyent Triton

- Heroku

- AWS Lambda (Functions as a Service that run in containers)

Self-hosted Container-based CaaS/PaaS

- Deis Workflow (Kubernetes)

- Rancher (Kubernetes, Mesos, Swarm, Cattle)

- Docker Datacenter (On-premise, also offered by AWS)

- CloudFoundry (Pivotal, IBM, etc.)

- Red Hat Openshift Container Platform

Short-lived Containers!

- Spin up containers on demand, perhaps for specific users

- Google do this all the time!

- Can be a great way to reduce resources required to run your system...

- ...and keep process/data isolated...

- ...and improve security...

- ...but you'll have to do some work yourselves to make this work!

- Unless you can use something for this purpose, like...

- ...AWS Lambda (or Azure Functions, Google Cloud Functions)

- ...aka "Serverless" computing

Windows!

First-class Docker support on Windows Server with Windows Server Containers

Storage

"Storage is hard" [1]...but, still a lot going on in this area.

Selection of Volume Plugins

- Flocker (ClusterHQ): Volume portability, supports many backends

- netappdvp (NetApp): iSCSI/NFS on NetApp storage

- blockbridge-docker-volume (Blockbridge): Vendor-specific

- Netshare (ContainX): NFS, AWS EFS & Samba/CIFS

- REX-Ray (EMC): AWS EBS, GCE, Cinder, EMC, VirtualBox

- Contiv Storage [2] (Cisco): Ceph, NFS

- Convoy (Rancher): Volume backup/restore, snapshot, migration

- Open Storage: Stateful services in multi-host Docker environment

[1] Brian Goff, Core Engineer, Docker

[2] Contiv Storage is still in alpha phase

Networking

Overlays

- Weave Net: Network plugin supporting VXLAN, Service Discovery (DNS), Encrypted traffic (IPSec), Load Balancing

- libnetwork: Core Docker library supporting driver / plugin model (built-in Overlay needs external KV-store)

- Swarm Mode Overlay: New in Docker Engine 1.12

Alternatives

- Calico: Can be deployed without encapsulation or overlays to provide high performance at massive scales

- Contiv Networking: Pluggable networking alternative to built-in Docker, Kubernetes, Mesos, and Nomad ecosystems

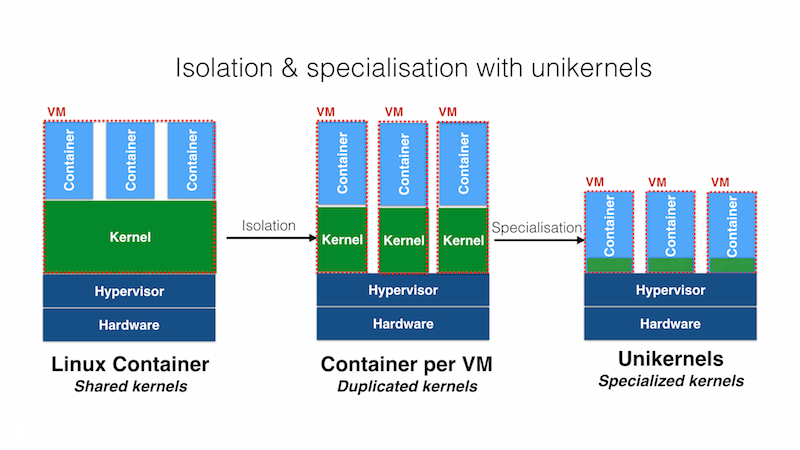

Unikernels!

- Allows you to build specific purpose kernels for a given process

- Promise to be even leaner than containers...

- And provide better isolation, as the kernels are separate

- Could provide the speed/lightweight nature of containers, with the improved separation of VMs

- But tooling in this space is where it was for containers several years ago, although...

Unikernels vs Containers

Image source: http://roadtounikernels.myriabit.com/pictures/unikernel.png

Unikernels on Docker!

- Docker, Inc., clearly sees unikernels as an important technology...

- ...see press release: "Docker Acquires Unikernel Systems to Extend the Breadth of the Docker Platform"

- ...and the early proof-of-concept demo [1]

- ...and some useful results that are starting to appear [2]

[1] Docker Online Meetup #31: Unikernels

[2] Improving Docker With Unikernels: Introducing HyperKit, VPNKit And DataKit

Docker Ecosystem

WARNING: While the core Docker system is mature, the wider ecosystem is still undergoing lots of change. The options around scheduling tools, platforms, etc., based on top of Docker are changing rapidly!